Microsoft launches Phi-3-mini, a small AI model that rivals larger models like Mixtral 8x7B and GPT-3.5

In the ever-intensifying generative AI race, Microsoft on Tuesday unveiled Phi-3-mini, the first of a new family of small language models (SLMs) that hold their own against much larger models and set a new bar for what compact AI models can do.

The Phi-3 family comprises three models: Phi-3-mini, with 3.8 billion parameters; Phi-3-small, with 7 billion; and Phi-3-medium, with 14 billion. Despite its size, Phi-3-mini goes toe-to-toe with Mixtral 8x7B and GPT-3.5 on benchmarks, and it is offered in two context-length variants: 4K tokens and an extended 128K tokens.

“We introduce phi-3-mini, a 3.8 billion parameter language model trained on 3.3 trillion tokens, whose overall performance, as measured by both academic benchmarks and internal testing, rivals that of models such as Mixtral 8x7B and GPT-3.5 (e.g., phi-3-mini achieves 69% on MMLU and 8.38 on MT-bench), despite being small enough to be deployed on a phone,” Microsoft said in its Phi-3 technical report.

What’s striking about Phi-3-mini is not just its performance but its size: at 3.8 billion parameters, it is small enough to run on-device without sacrificing quality or cost-effectiveness. Phi-3-small and Phi-3-medium, meanwhile, are still in training and slated for release soon.

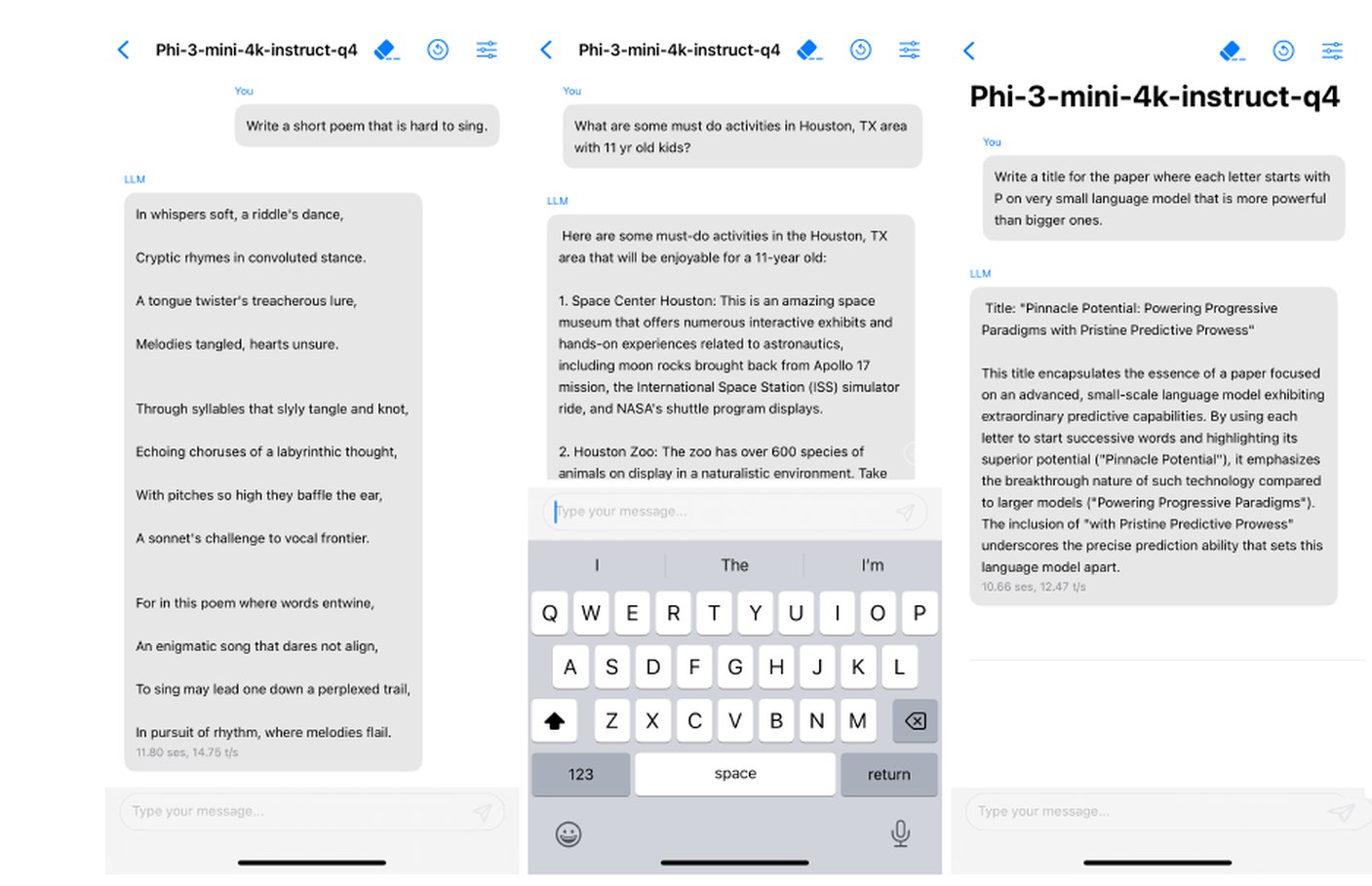

4-bit quantized phi-3-mini running natively on an iPhone with A16 Bionic chip, generating over 12 tokens per second. (Source: Microsoft)
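Why does 4-bit quantization make a phone deployment plausible? A back-of-the-envelope calculation helps. The sketch below estimates raw weight storage for a 3.8-billion-parameter model at different precisions and the decoding time at the roughly 12 tokens per second shown in the iPhone demo. It is a rough estimate under stated assumptions: it counts only the weights (ignoring activations, the KV cache and quantization metadata), and the 300-token response length is an arbitrary example.

```python
# Back-of-the-envelope estimate of weight memory and decoding time for a
# 3.8B-parameter model. Assumption: only the weights are counted; activations,
# the KV cache and quantization metadata (scales/zero-points) are ignored.

PHI3_MINI_PARAMS = 3.8e9  # parameter count reported by Microsoft


def weight_memory_gb(num_params: float, bits_per_param: int) -> float:
    """Return raw weight storage in gigabytes (10**9 bytes)."""
    return num_params * bits_per_param / 8 / 1e9


def seconds_to_generate(num_tokens: int, tokens_per_second: float) -> float:
    """Return how long decoding num_tokens takes at a fixed throughput."""
    return num_tokens / tokens_per_second


if __name__ == "__main__":
    for bits in (16, 8, 4):
        gb = weight_memory_gb(PHI3_MINI_PARAMS, bits)
        print(f"{bits:>2}-bit weights: {gb:.1f} GB")
    # ~12 tokens/s is the iPhone demo figure; 300 tokens is an arbitrary length.
    print(f"300 tokens at 12 tok/s: {seconds_to_generate(300, 12):.0f} s")
```

Under these assumptions, 16-bit weights need about 7.6 GB, while 4-bit weights shrink to about 1.9 GB, which fits far more comfortably in a phone's memory.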

Why This Matters:

Phi-3-mini’s achievement is noteworthy because it matches, and on several benchmarks surpasses, much larger models such as GPT-3.5 and Mixtral 8x7B despite having far fewer parameters. This matters because smaller models typically run faster, cost less to operate, and demand fewer computational resources.
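To put “fewer computational resources” in rough numbers, the sketch below applies the common rule of thumb that a transformer's forward pass costs about 2 FLOPs per active parameter per token. The Mixtral 8x7B figures (about 46.7 billion total parameters, about 12.9 billion active per token, since it is a sparse mixture-of-experts model) are an assumption taken from Mistral AI's published numbers, not from Microsoft's report. GPT-3.5 is left out because OpenAI has not disclosed its parameter count.

```python
# Rough per-token inference cost using the approximation that a decoder-only
# transformer's forward pass costs ~2 FLOPs per active parameter per token
# (ignores the extra attention cost of long contexts).

MODELS = {
    # name: (total parameters, parameters active per token)
    "Phi-3-mini": (3.8e9, 3.8e9),
    # Assumption: Mistral AI's published figures for the sparse
    # mixture-of-experts Mixtral 8x7B (~46.7B total, ~12.9B active).
    "Mixtral 8x7B": (46.7e9, 12.9e9),
}


def gflops_per_token(active_params: float) -> float:
    """Approximate forward-pass cost in GFLOPs for one generated token."""
    return 2 * active_params / 1e9


def fp16_weights_gb(total_params: float) -> float:
    """Weight storage in GB at 16 bits (2 bytes) per parameter."""
    return total_params * 2 / 1e9


for name, (total, active) in MODELS.items():
    print(f"{name:<13} ~{gflops_per_token(active):.1f} GFLOPs/token, "
          f"~{fp16_weights_gb(total):.1f} GB of fp16 weights")
```

By this crude measure Phi-3-mini needs roughly a third of Mixtral's per-token compute and about a twelfth of its weight memory; real-world speed also depends on hardware, batching and memory bandwidth.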

Phi-3-mini is available on Microsoft’s Azure cloud platform, as well as on open platforms such as Hugging Face and Ollama. The upcoming Phi-3-small and Phi-3-medium models are expected to extend the family with larger, more capable options.

Microsoft’s announcement shows that, with the right training data and methods, small language models can pack a hefty punch. In particular, Phi-3-mini’s capabilities mark a major step toward running high-performance models efficiently on mobile devices.

The launch of Phi-3-mini comes just a month after Microsoft hired DeepMind co-founder Mustafa Suleyman to run its newly formed consumer AI unit dubbed Microsoft AI.