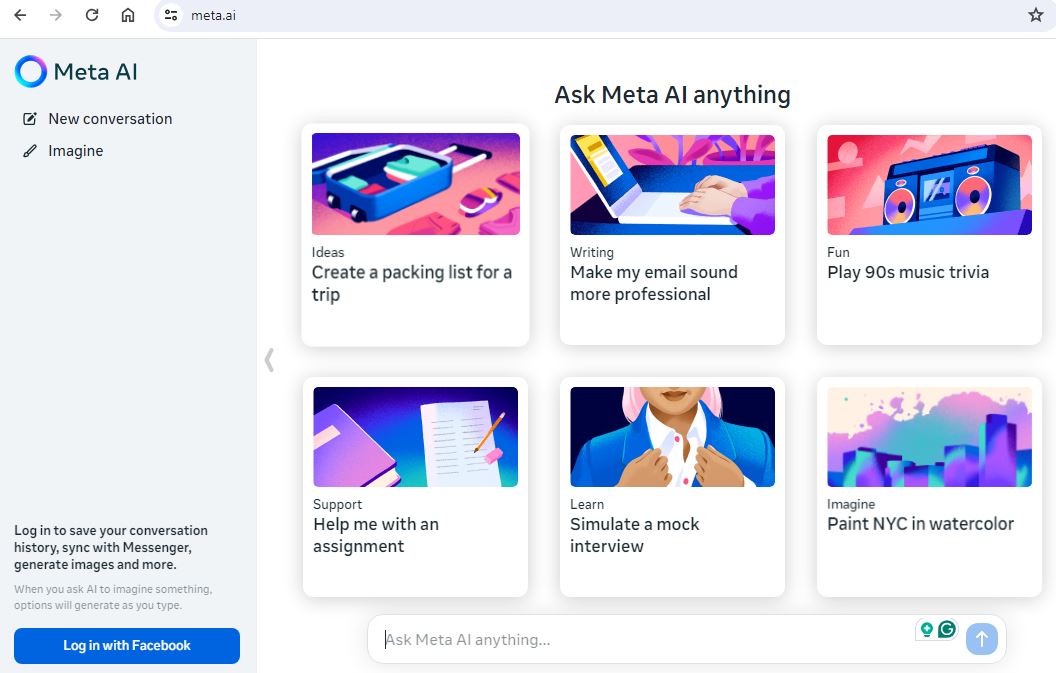

Meta launches Meta.AI to compete with ChatGPT

Meta has unveiled Meta.AI, an AI assistant built on its new Llama 3 large language models. The launch is widely seen as a direct challenge to OpenAI's ChatGPT, currently the dominant consumer AI assistant.

Meta.AI arrives alongside Llama 3, the latest generation of Meta's openly available large language models, released in 8-billion- and 70-billion-parameter versions. Meta reports that both models outperform comparably sized competitors on a range of standard benchmarks, strengthening its position as a serious contender in the field.

What sets Meta's approach apart is its openness. Unlike some competitors, Meta has released the Llama 3 model weights to the public, allowing developers to experiment with, build on, and fine-tune the models. The release is not unrestricted, however: under the Llama 3 license, companies whose products exceeded 700 million monthly active users must request a separate license from Meta, a clause that mainly affects the largest technology and cloud companies.

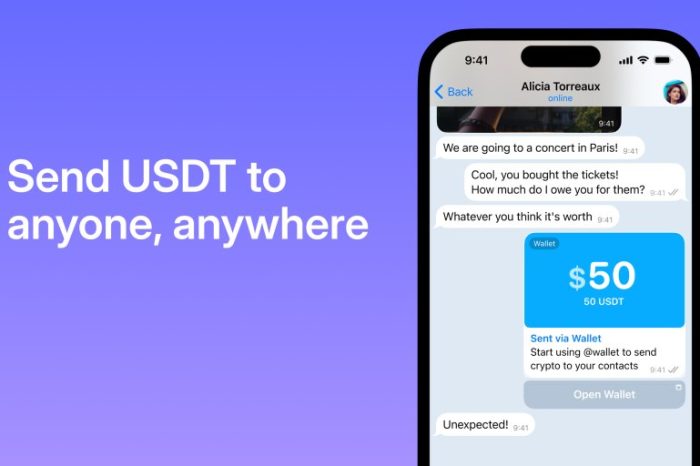

Meta.AI also has a distribution advantage. Because the assistant is built into Meta's existing apps, including Facebook, Instagram, and WhatsApp, it is immediately available to a very large user base. Within these apps it can help users with everyday tasks, assist with content creation, and offer more personalized experiences.

From a technical standpoint, both Llama 3 models (8 billion and 70 billion parameters) aim to balance efficiency with strong performance across a wide range of tasks. Meta has also indicated that larger, more capable models are still in development.

Llama 3 and Meta.AI

According to Meta's announcement, the Llama 3 models were pretrained on more than 15 trillion tokens, roughly seven times the 2 trillion tokens used to train the previous generation, Llama 2. Meta credits this much larger training set, along with other changes, for a substantial share of the models' improved capabilities.