Nvidia unveils H200 GPU, the world’s most powerful AI chip for training large language models

Nvidia on Monday unveiled the H200, its latest graphics processing unit (GPU), designed specifically for training and deploying the advanced artificial intelligence models behind the current generative AI boom.

The new GPU, based on Nvidia’s Hopper architecture, is an upgrade to the H100, the chip OpenAI famously used to train its advanced language model, GPT-4. Demand for these chips is intense, with large companies, startups, and government agencies all competing for a limited supply.

The news comes a month after the chipmaking giant hinted at a shift to a one-year release cadence due to high demand, with the B100 chip, based on the forthcoming Blackwell architecture, expected in 2024. Dubbing it “the world’s leading AI computing platform,” Nvidia said in a news release:

“The NVIDIA H200 is the first GPU to offer HBM3e — faster, larger memory to fuel the acceleration of generative AI and large language models, while advancing scientific computing for HPC workloads. With HBM3e, the NVIDIA H200 delivers 141GB of memory at 4.8 terabytes per second, nearly double the capacity and 2.4x more bandwidth compared with its predecessor, the NVIDIA A100.”
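The ratios in the quote can be sanity-checked with a little arithmetic. The sketch below uses the H200 figures from the release; the A100 figures (80GB of memory at roughly 2.0 terabytes per second for the 80GB model) are an assumption taken from publicly listed specifications, not from this article.

```python
# Figures quoted in the Nvidia release for the H200.
H200_MEMORY_GB = 141
H200_BANDWIDTH_TBPS = 4.8

# Assumed figures for the A100 80GB model (not stated in the article).
A100_MEMORY_GB = 80
A100_BANDWIDTH_TBPS = 2.0


def ratio(new: float, old: float) -> float:
    """Return how many times larger `new` is than `old`."""
    return new / old


capacity_ratio = ratio(H200_MEMORY_GB, A100_MEMORY_GB)
bandwidth_ratio = ratio(H200_BANDWIDTH_TBPS, A100_BANDWIDTH_TBPS)

print(f"Memory capacity: {capacity_ratio:.2f}x the A100")
print(f"Memory bandwidth: {bandwidth_ratio:.2f}x the A100")
```

Under those assumptions the capacity ratio comes out at about 1.76, consistent with “nearly double,” and the bandwidth ratio at 2.4.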

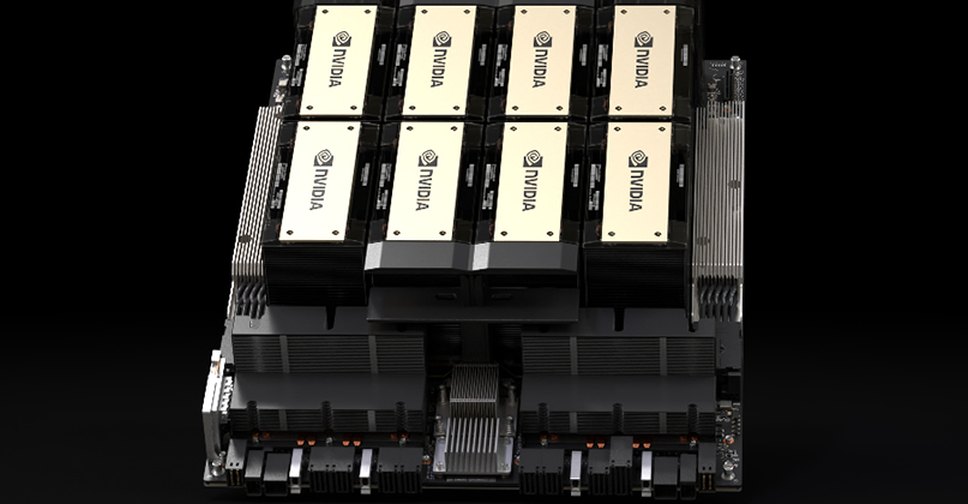

Nvidia H200 AI chip

According to estimates from Raymond James, H100 chips cost between $25,000 and $40,000. The firm also notes that creating the largest models requires thousands of these chips working together in a process known as “training.” The soaring interest in Nvidia’s AI GPUs has propelled the company’s stock up by more than 230% in 2023, and Nvidia anticipates approximately $16 billion in revenue for its fiscal third quarter, a 170% increase from the previous year.

“To create intelligence with generative AI and HPC applications, vast amounts of data must be efficiently processed at high speed using large, fast GPU memory,” said Ian Buck, vice president of hyperscale and HPC at NVIDIA. “With NVIDIA H200, the industry’s leading end-to-end AI supercomputing platform just got faster to solve some of the world’s most important challenges.”

The key improvement in the H200 is its 141GB of next-generation “HBM3e” memory, which boosts the chip’s performance in “inference,” the use of a trained model to generate text, images, or predictions. Nvidia claims that the H200 will generate output nearly twice as fast as the H100, based on a test using Meta’s Llama 2 LLM.

Expected to ship in the second quarter of 2024, the H200 will compete with AMD’s MI300X GPU. Like the H200, AMD’s chip has more memory than its predecessors, which helps fit large models onto the hardware for running inference.
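To see why memory capacity matters for inference, here is a rough back-of-the-envelope sketch of how much memory a model’s weights alone occupy. The parameter counts are the approximate published Llama 2 sizes and the bytes-per-parameter values are common numeric formats; both are assumptions for illustration, and the estimate ignores the additional memory inference needs for activations and caches.

```python
H200_MEMORY_GB = 141  # capacity quoted in the article

# Bytes per parameter for two common numeric formats (illustrative assumption).
BYTES_PER_PARAM = {"fp16": 2, "int8": 1}

# Approximate Llama 2 model sizes, in billions of parameters (assumption).
LLAMA2_PARAMS_BILLIONS = {"7B": 7, "13B": 13, "70B": 70}


def weights_gb(params_billions: float, bytes_per_param: int) -> float:
    """Approximate weight footprint in GB, taking 1 GB as 1e9 bytes."""
    return params_billions * bytes_per_param


def fits(params_billions: float, precision: str,
         memory_gb: float = H200_MEMORY_GB) -> bool:
    """True if the weights alone fit in `memory_gb` (no activations or caches)."""
    return weights_gb(params_billions, BYTES_PER_PARAM[precision]) <= memory_gb


for name, size in LLAMA2_PARAMS_BILLIONS.items():
    for precision, nbytes in BYTES_PER_PARAM.items():
        gb = weights_gb(size, nbytes)
        verdict = "fits" if fits(size, precision) else "does not fit"
        print(f"Llama 2 {name} at {precision}: ~{gb:.0f} GB, {verdict} in {H200_MEMORY_GB} GB")
```

By this estimate, the weights of a 70-billion-parameter model at 16-bit precision take about 140GB, just under the H200’s 141GB but well beyond what an 80GB GPU can hold on its own. In practice the extra working memory would still push such a model across several GPUs or to a lower precision.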

Nvidia says the H200 will be compatible with the H100, meaning that AI companies already training with the earlier model won’t need to overhaul their server systems or software to adopt the new version. The H200 will be available in four-GPU or eight-GPU server configurations on Nvidia’s HGX complete systems, as well as in a chip called GH200, which pairs the H200 GPU with an Arm-based processor.

However, the reign of the H200 as the fastest Nvidia AI chip may be short-lived. While Nvidia offers various chip configurations, new semiconductors typically make significant leaps about every two years when manufacturers shift to a different architecture. Both the H100 and H200 are based on Nvidia’s Hopper architecture.