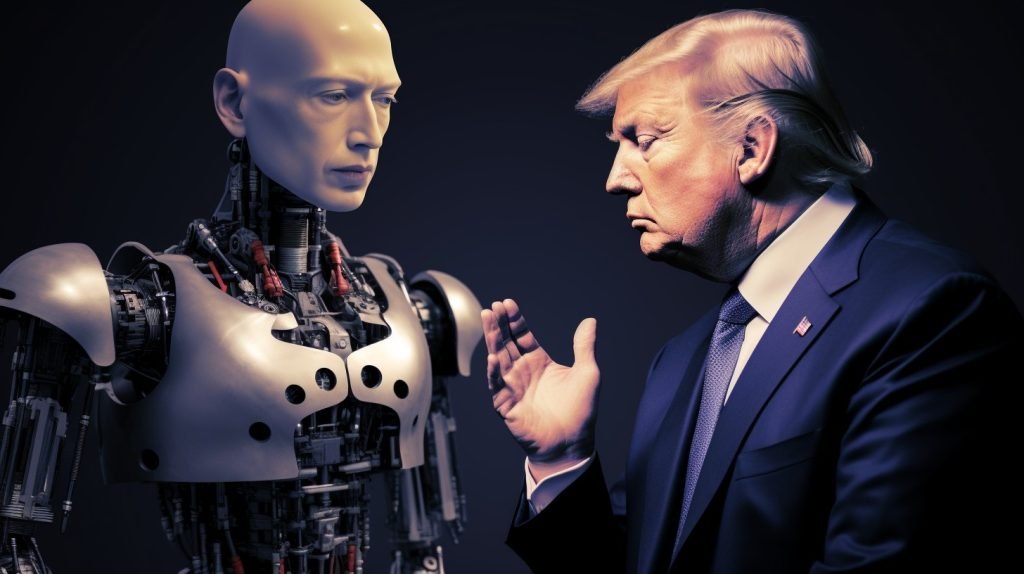

Meta will start requiring advertisers to disclose the use of AI in political ads

Advertisers running political ads created or altered with artificial intelligence on Facebook and Instagram will have to disclose that fact under a new policy from Meta Platforms, the parent company of both platforms.

Meta announced on Wednesday that starting in 2024, advertisers will need to be transparent about the use of artificial intelligence (AI) or other digital techniques when modifying or creating political, social, or election-related ads on Facebook and Instagram, Reuters reported.

In a blog post, Meta, the world’s second-largest digital advertising platform, said advertisers must disclose when a created or altered ad depicts real people saying or doing things they did not actually say or do, or when it shows lifelike images of people who do not exist. In subsequent updates to its help center, Meta reiterated its stance:

“As we continue to test new Generative AI ads creation tools in Ads Manager, advertisers running campaigns that qualify as ads for Housing, Employment or Credit or Social Issues, Elections, or Politics, or related to Health, Pharmaceuticals or Financial Services aren’t currently permitted to use these Generative AI features,” Meta wrote.

In addition, the company said it will require advertisers to disclose if their ads depict events that never occurred, alter footage of a real event, or portray a real event without using a genuine image, video, or audio recording of it.

These policy updates, along with Meta’s earlier decision to prohibit political advertisers from utilizing generative AI ad tools, emerged a month after Meta announced its initiative to broaden advertisers’ access to AI-powered advertising tools. These tools enable the instant creation of backgrounds, image alterations, and variations of ad copy based on simple text prompts.

Meanwhile, Alphabet’s Google, the largest digital advertising company, recently introduced similar image-customizing generative AI ad tools. The company intends to keep politics out of those products by blocking a list of “political keywords” from being used as prompts.

In the U.S., legislators have expressed concerns about the use of AI to produce content that falsely portrays candidates in political ads, potentially influencing federal elections. The emergence of various new “generative AI” tools has made it convenient and inexpensive to generate persuasive deepfakes.

Meta has already taken steps to prevent its user-facing Meta AI virtual assistant from creating lifelike images of public figures. Nick Clegg, the company’s top policy executive, said last month that the rules on the use of generative AI in political advertising needed to be updated.

However, the Facebook parent company said its new policy won’t mandate disclosures when the digitally created or altered content is “inconsequential or immaterial to the claim, assertion, or issue raised in the ad.” This exemption covers adjustments such as image resizing, cropping, color correction, and image sharpening.