Artists can now fight back against AI with new data poisoning tool Nightshade

The launch of ChatGPT by OpenAI late last year marked a pivotal moment in artificial intelligence (AI) and ushered in an exciting new era. ChatGPT and other generative AI chatbots have given millions of people around the world the ability to accomplish tasks that were previously out of reach.

However, the dual nature of AI is becoming increasingly evident. On one hand, it acts as a catalyst for creativity and a potent tool for boosting productivity. On the other hand, it has been used to exploit the original works of artists and creators, raising important moral, ethical, and creative concerns. As the boundary between art and technology blurs, artists face a mounting challenge: the unauthorized use and exploitation of their creative works by generative AI.

Over the years, artists have watched their creations taken, altered, and profited from without their permission, leaving them in search of ways to safeguard their intellectual property. In a bid to counter the unauthorized use of their artwork by AI companies, artists now have access to a new tool called Nightshade that enables them to fight back against generative AI.

Nightshade offers artists a way to reclaim control over their artistic vision and to defend their work against AI-driven theft, giving creators a renewed sense of security in the digital age.

According to a recent piece published in MIT Technology Review, Nightshade is a data poisoning tool: it lets artists “add invisible changes” to the pixels in their digital art before uploading it online. If the altered work is then scraped into an AI training dataset, it can cause the resulting image-generating model to break in unpredictable and chaotic ways.

The intention behind Nightshade is to combat AI companies that employ artists’ creations for training their models without obtaining the creator’s consent. By essentially “poisoning” this training data, the tool can potentially harm future versions of image-generating AI models, including the likes of DALL-E, Midjourney, and Stable Diffusion. This would result in outputs that are nonsensical, where dogs might morph into cats, cars into cows, and so forth. This research, which MIT Technology Review had an exclusive preview of, has been submitted for peer review at the Usenix computer security conference.

In recent months, AI companies such as OpenAI, Meta, Google, and Stability AI have faced a barrage of lawsuits from artists who contend that their copyrighted content and personal information were scraped without permission or compensation. Ben Zhao, a professor at the University of Chicago who led the team behind Nightshade, believes the tool could help tip the balance of power back in favor of artists by creating a potent deterrent against disregarding artists’ copyright and intellectual property. Meta, Google, Stability AI, and OpenAI have not responded to MIT Technology Review’s request for comment on how they might react to this development.

Furthermore, Zhao’s team has also created another tool called Glaze, which permits artists to “mask” their distinctive personal style, preventing it from being exploited by AI companies. It functions in a manner similar to Nightshade by subtly altering image pixels in ways imperceptible to the human eye but capable of leading machine-learning models to interpret the image differently from its actual content.
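To make the idea of an “invisible” pixel change concrete, here is a minimal Python sketch. It is not the Nightshade or Glaze algorithm, which this article does not describe; it only shows what it means to keep every pixel within a small budget of its original value. The function name `perturb`, the budget `epsilon`, and the tiny grayscale image are all assumptions made for illustration, and a real tool would choose its changes deliberately to mislead a model rather than at random.

```python
import random

def perturb(image, epsilon=2, seed=0):
    """Return a copy of a grayscale image with each pixel shifted by at most epsilon.

    `image` is a list of rows of integers in the range 0-255.
    """
    rng = random.Random(seed)
    result = []
    for row in image:
        new_row = []
        for pixel in row:
            shifted = pixel + rng.randint(-epsilon, epsilon)
            # Clamp so the value stays a valid 8-bit intensity.
            new_row.append(max(0, min(255, shifted)))
        result.append(new_row)
    return result

# Hypothetical 2x3 grayscale image used only for this illustration.
original = [
    [0, 64, 128],
    [192, 255, 32],
]
altered = perturb(original, epsilon=2)

# Largest per-pixel difference between the two images.
max_change = max(
    abs(a - b)
    for row_a, row_b in zip(original, altered)
    for a, b in zip(row_a, row_b)
)
print(max_change <= 2)  # prints True
```

A change of two intensity levels out of 255 is far too small for a viewer to notice, which is the sense in which such edits are described as imperceptible.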

How Nightshade Works

Nightshade takes advantage of a security vulnerability in generative AI models, one that stems from their training on extensive datasets, often composed of images scraped from the internet. Nightshade tampers with these images.

For artists who want to upload their work online without risking it being collected by AI companies, Glaze offers a solution. It lets artists upload their creations and overlay them with a different artistic style, effectively masking the original. Artists can also choose to apply Nightshade in the same process. This muddies the waters for AI developers who scrape the internet for data, whether to refine existing AI models or to build new ones: the poisoned data enters the model’s dataset and causes the model to malfunction.


These poisoned data samples have the power to skew models into learning unusual associations; for instance, they might interpret images of hats as cakes or images of handbags as toasters. Removing this tainted data is a challenging task, often requiring tech companies to painstakingly locate and eliminate each corrupted sample.

Researchers tested the attack on Stable Diffusion’s latest models and on an AI model they built from scratch. When they fed Stable Diffusion just 50 poisoned images of dogs and then prompted it to generate dog images, the results turned bizarre, with creatures featuring extra limbs and cartoon-like faces. With 300 poisoned samples, an attacker could manipulate Stable Diffusion into generating images of dogs that resembled cats.
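The way the damage grows with the number of poisoned samples can be illustrated with a toy model. This is a sketch under heavy assumptions, not the researchers’ experiment: each image is reduced to a single made-up feature value (0.0 for dog-like, 1.0 for cat-like), and the “model” simply averages the features of everything captioned “dog.” The names `learned_dog_concept`, `CLEAN_DOG`, and `CAT_LIKE`, as well as the count of 200 clean images, are hypothetical.

```python
CLEAN_DOG = 0.0   # assumed feature value of a genuine dog image
CAT_LIKE = 1.0    # assumed feature value of a poisoned image captioned "dog"

def learned_dog_concept(clean_count, poisoned_count):
    """Average feature of all samples captioned "dog" in the toy dataset."""
    total = clean_count + poisoned_count
    if total == 0:
        raise ValueError("need at least one sample")
    return (clean_count * CLEAN_DOG + poisoned_count * CAT_LIKE) / total

# Hypothetical dataset with 200 clean dog images.
for poisoned in (0, 50, 300):
    concept = learned_dog_concept(200, poisoned)
    closer_to = "cat" if concept > 0.5 else "dog"
    print(poisoned, round(concept, 2), closer_to)
# prints:
# 0 0.0 dog
# 50 0.2 dog
# 300 0.6 cat
```

In this toy setting a small dose only nudges the learned concept, while a larger dose pushes it past the midpoint toward the wrong class. Real image generators are far more complex, so the numbers here carry no meaning beyond the illustration.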

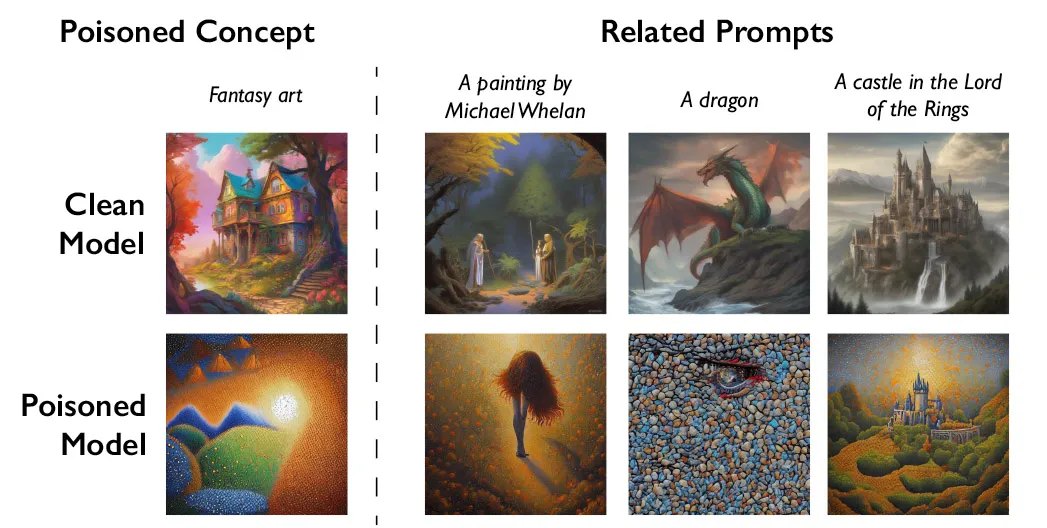

COURTESY OF THE RESEARCHERS

Generative AI models are adept at forming associations between words, and Nightshade exploits this. The poison affects not only an individual word like “dog” but also related concepts such as “puppy,” “husky,” and “wolf.” Nor is the effect limited to exact matches; it also reaches tangentially related images. For instance, if a model encounters a poisoned image under the “fantasy art” prompt, its responses to prompts like “dragon” or “a castle in The Lord of the Rings” can be distorted as well.

While there is concern that this data poisoning technique could be misused for malicious purposes, causing substantial harm to larger, more robust AI models would require thousands of poisoned samples, because these models typically train on billions of data samples. Experts nonetheless emphasize the need to develop defenses, as such attacks could become a threat in the near future. Vitaly Shmatikov, a professor at Cornell University who studies AI model security, says now is the time to build safeguards against these vulnerabilities.
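A quick back-of-the-envelope calculation puts those magnitudes side by side. The specific numbers below (5,000 poisoned samples and 2 billion training samples) are assumptions chosen only to match the “thousands” and “billions” mentioned above; they are not figures from the research, and `poisoned_share` is a hypothetical helper.

```python
def poisoned_share(poisoned_samples, total_samples):
    """Fraction of a training set made up of poisoned samples."""
    if total_samples <= 0:
        raise ValueError("total_samples must be positive")
    return poisoned_samples / total_samples

# Assumed counts, for illustration only.
share = poisoned_share(5_000, 2_000_000_000)
print(f"{share:.6%}")  # prints 0.000250%
```

Under these assumed numbers the poisoned samples make up a minuscule share of the data, which gives a sense of why locating and removing each one is painstaking work.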

“We don’t yet know of robust defenses against these attacks. We haven’t yet seen poisoning attacks on modern [machine learning] models in the wild, but it could be just a matter of time,” Shmatikov told MIT Technology Review. “The time to work on defenses is now,” he added.