Nvidia unveils new chips for its “Eos” supercomputer and Omniverse platform, calls Eos the world’s fastest AI supercomputer

Tech and gaming giant NVIDIA today unveiled new chips and technologies designed to speed up artificial intelligence (AI) computing. Nvidia said they will boost the computing speed of increasingly complex AI algorithms as the company steps up competition against rival chipmakers in the data center market. The announcements were made at Nvidia’s online AI developers conference.

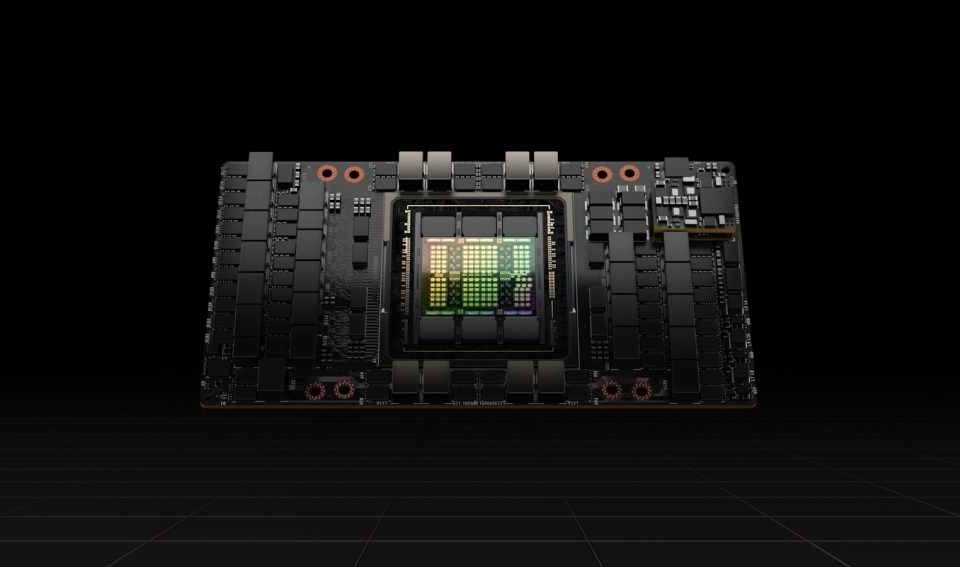

To power the next wave of AI data centers, NVIDIA unveiled its next-generation accelerated computing platform built on the NVIDIA Hopper™ architecture, which it says delivers an order-of-magnitude performance leap over its predecessor. Named after Grace Hopper, a pioneering U.S. computer scientist, the new architecture succeeds the NVIDIA Ampere architecture, launched two years ago.

Alongside the platform, Nvidia announced its first Hopper-based GPU, the NVIDIA H100, packed with 80 billion transistors. In a keynote address delivered by CEO Jensen Huang, Nvidia described the H100 as the world’s largest and most powerful accelerator. Nvidia said its Eos supercomputer will run at over 18 exaflops.

The company also presented its latest breakthroughs in AI, data science, high-performance computing, graphics, edge computing, networking, and autonomous machines, and said the new chips will also help power its Omniverse platform.

“Data centers are becoming AI factories — processing and refining mountains of data to produce intelligence,” said Jensen Huang, founder and CEO of NVIDIA. “NVIDIA H100 is the engine of the world’s AI infrastructure that enterprises use to accelerate their AI-driven businesses.”

Nvidia’s graphics processing units (GPUs), which first made their name by improving video quality in the gaming market, have become the dominant chips companies use for AI workloads. The company said the latest GPU, the H100, can cut computing times for some AI model training jobs from weeks to days.

In addition to the new chips, NVIDIA announced the launch of Omniverse Cloud to connect tens of millions of designers and creators. Omniverse Cloud includes Nucleus Cloud, a simple “one-click-to-collaborate” sharing tool that lets artists access and edit large 3D scenes from anywhere without having to transfer massive datasets.

“Designers working remotely collaborate as if in the same studio. Factory planners work inside a digital twin of the real plant to design a new production flow. Software engineers test a new software build on the digital twin of a self-driving car before releasing it to the fleet. A new wave of work is coming that can only be done in virtual worlds,” said Jensen Huang, founder and CEO of NVIDIA. “Omniverse Cloud will connect tens of millions of designers and creators, and billions of future AIs and robotic systems.”

Nvidia said the H100 will be produced on Taiwan Semiconductor Manufacturing Company’s (TSMC) cutting-edge 4-nanometer process and will be available in the third quarter.

The H100 will also be used to build Nvidia’s new “Eos” supercomputer, which Nvidia said will be the world’s fastest AI system when it begins operation later this year.

Nvidia is not the only company working on an AI supercomputer. In January, Meta Platforms, Facebook’s parent company, said it would build the world’s fastest AI supercomputer this year and that it would perform at nearly 5 exaflops.

One exaflop is the ability to perform 1 quintillion (1,000,000,000,000,000,000, or 10^18) floating-point calculations per second.
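To make the scale concrete, the short sketch below converts the exaflop figures quoted in this article into raw operations per second. It assumes the simple definition above (1 exaflop = 10^18 operations per second); the function name is illustrative only. Vendors may quote "AI exaflops" at different numeric precisions, so the two figures are not necessarily directly comparable.

```python
# 1 exaflop = 10**18 floating-point operations per second (one quintillion)
EXAFLOP = 10**18


def exaflops_to_ops_per_second(exaflops: float) -> float:
    """Convert a figure quoted in exaflops to operations per second."""
    return float(exaflops) * EXAFLOP


# Approximate figures quoted in this article
eos = exaflops_to_ops_per_second(18)   # Nvidia's Eos: "over 18 exaflops"
meta = exaflops_to_ops_per_second(5)   # Meta's planned system: "nearly 5 exaflops"

print(f"Eos:  {eos:.1e} operations per second")
print(f"Meta: {meta:.1e} operations per second")
```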

In addition to the GPU, Nvidia introduced a new central processing unit (CPU) called the Grace CPU Superchip, based on Arm technology. It is the first new Arm-based chip Nvidia has announced since the company’s deal to buy Arm Ltd fell apart last month due to regulatory hurdles.

The Grace CPU Superchip, which will be available in the first half of next year, connects two CPU chips and will focus on AI and other tasks that require intensive computing power.
