Meet Arnav Kapur, a student at MIT’s Media Lab who can surf the internet with his mind

Earlier today, Elon Musk presented a live demo of Neuralink V0.9 that showed a brain-machine interface in action. During the press conference, the Neuralink team showcased a future technology that could help people deal with brain or spinal cord injuries or control 3D digital avatars. Neuralink is a device that aims to augment human abilities by connecting the brain to a computer.

Neuralink is not alone. For more than 30 years, MIT has been recruiting the brightest minds to work in its Media Lab, where life-changing inventions are created. One of those inventions is a mind-reading device that translates silent thoughts into speech.

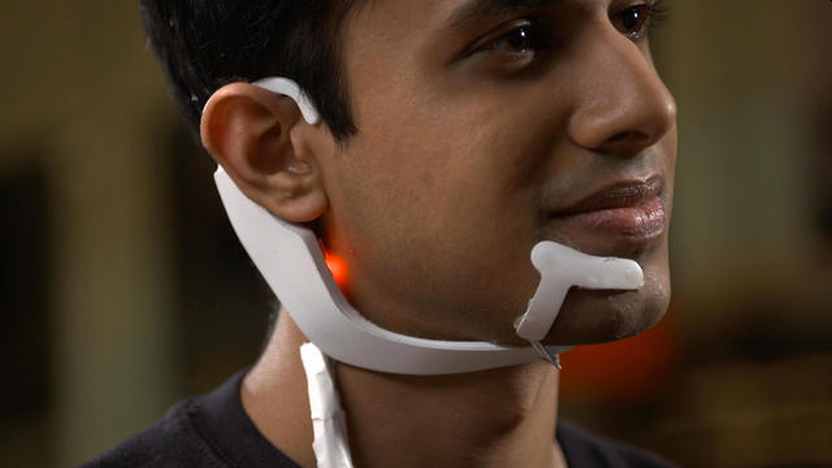

Arnav Kapur is a 25-year-old graduate student and an MIT Media Lab researcher. Kapur has developed a technology to connect the human brain to the internet. For the past several years, Kapur has been developing a new AI-enabled headset device called AlterEgo.

According to the MIT Media Lab, the AlterEgo headset uses powerful sensors to detect the signals the brain sends to internal speech mechanisms, like the tongue or larynx, when you speak to yourself. Imagine asking yourself a question but not actually saying the words aloud. Even if you don’t move your lips or your face, your internal speech system is still doing the work of forming that sentence.

In an interview with “60 Minutes,” Kapur silently Googles questions and hears answers through vibrations transmitted through his skull and into his inner ear. During the interview, “60 Minutes” correspondent Scott Pelley tested Kapur’s system, which allows users to surf the internet with their minds.

Below is a transcript of the interview.

Arnav Kapur: What happens is when you’re reading or when you’re talking to yourself, your brain transmits electrical signals to your vocal cords. You can actually pick these signals up and you can get certain clues as to what the person intends to speak.

Scott Pelley: So the brain is sending an electrical signal for a word that you would normally speak but your device is intercepting that signal?

Arnav Kapur: It is.

Scott Pelley: So instead of speaking the word, your device is sending it into a computer.

Arnav Kapur: That’s correct.

Scott Pelley: That’s unbelievable. Let’s see how this works.

So we tried him.

Scott Pelley: What is 45,689 divided by 67?

Arnav Kapur: Sure.

He silently asks the computer and then hears the answer through vibrations transmitted through his skull and into his inner ear.

Arnav Kapur: Six eight one point nine two five.

Exactly right.

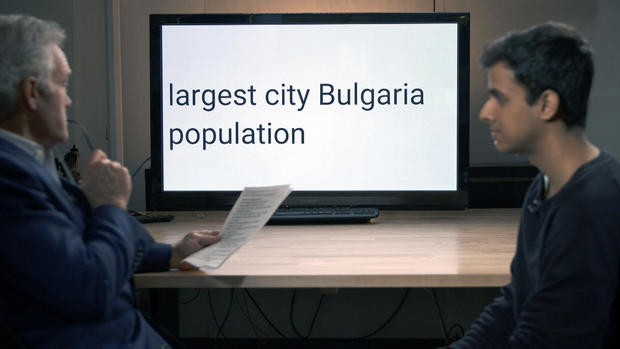

Scott Pelley: One more. What’s the largest city in Bulgaria and what is the population?

The screen shows how long it takes the computer to read the words that he’s saying to himself.

Arnav Kapur: Sofia, 1.21 million.

Scott Pelley: That is correct. You just Googled that.

Arnav Kapur: I did.

Scott Pelley: You could be an expert in any subject. You have the entire internet in your head.

Arnav Kapur: That’s the idea.

Ideas are the currency of MIT’s Media Lab. The lab is a six-story tower of Babel where 230 graduate students speak dialects of art, engineering, biology, physics and coding, all translated into innovation.

Hugh Herr: The Media Lab is this glorious mixture, this renaissance, where we break down these formal disciplines and we mix it all up and we see what pops out. That’s the magic, that intellectual diversity.

Hugh Herr is a professor who leads an advanced prosthetics lab.

Scott Pelley: And what do you get from that?

Hugh Herr: You get this craziness. When you put, like, a toy designer next to a person that’s thinking about what instruments will look like in the future next to someone like me, that’s interfacing machines to the nervous system, you get really weird technologies. You get things that no one could’ve conceived of.
