Samsung’s new AI algorithms make it easy to create moving faces from just a single photo

Long before “fake news” gained popularity in mainstream media, there were deepfakes: media in which a person in an existing image or video is replaced with someone else’s likeness using artificial neural networks. The idea first surfaced three years ago, when a team of researchers from the University of Washington published a paper titled “Synthesizing Obama: Learning Lip Sync from Audio.” Their system, published in 2017, modifies video footage of former president Barack Obama so that he appears to mouth the words contained in a separate audio track.

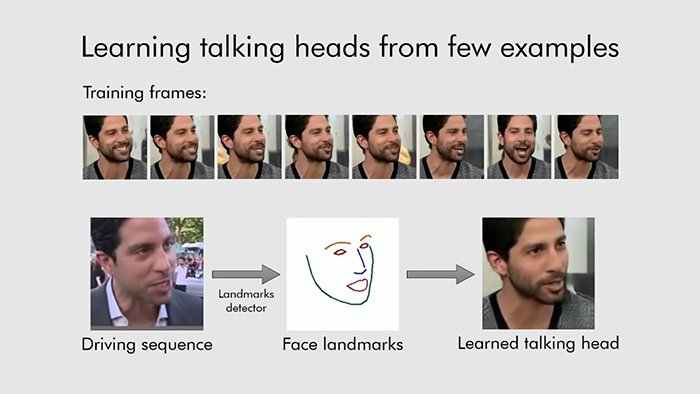

Now, Samsung is taking deepfakes to the next level. Its results are much more sophisticated than the lip-synced Obama footage created three years ago. Last year, machine learning researchers from the Samsung AI Center and the Skolkovo Institute of Science and Technology in Russia developed a new AI system that can create realistic deepfake videos of a person’s face from very few input photos, even from a single photo.

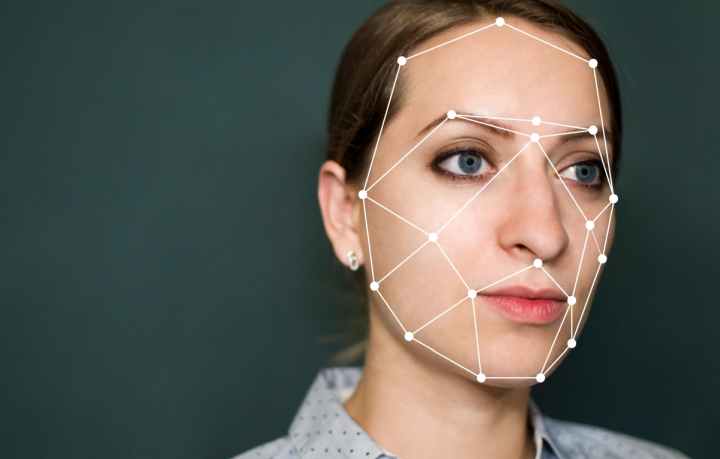

(Samsung AI Center)

The new algorithms work best with a variety of sample images taken at different angles, but they can be quite effective with just one picture to work from, even a painting. The researchers published their results in a paper titled “Few-Shot Adversarial Learning of Realistic Neural Talking Head Models,” available on the preprint server arXiv.org. Unlike earlier deepfake techniques, which needed many images or long stretches of footage of the target person, Samsung’s AI can animate a face from a single picture, making it far less data-hungry than other approaches.

Below is a video of how Samsung’s AI technology is reshaping the future by making videos out of almost any picture.

In recent years, many deepfake apps have been launched. One of these apps, FakeApp, makes it possible for almost anyone to create a deepfake video. Deepfakes have attracted attention because of their potential use in politics, financial fraud, celebrity pornography, fake news, and hoaxes. Many in industry and government have expressed concern, and some are calling for regulations to limit their use and future consequences.

It’s also getting harder to spot a deepfake video. Below is a video from Bloomberg QuickTake explaining how good deepfakes have gotten in the last few months and what’s being done to counter them.