Hands-free communication without saying a word: Facebook is on the verge of making a brain-reading computer a reality

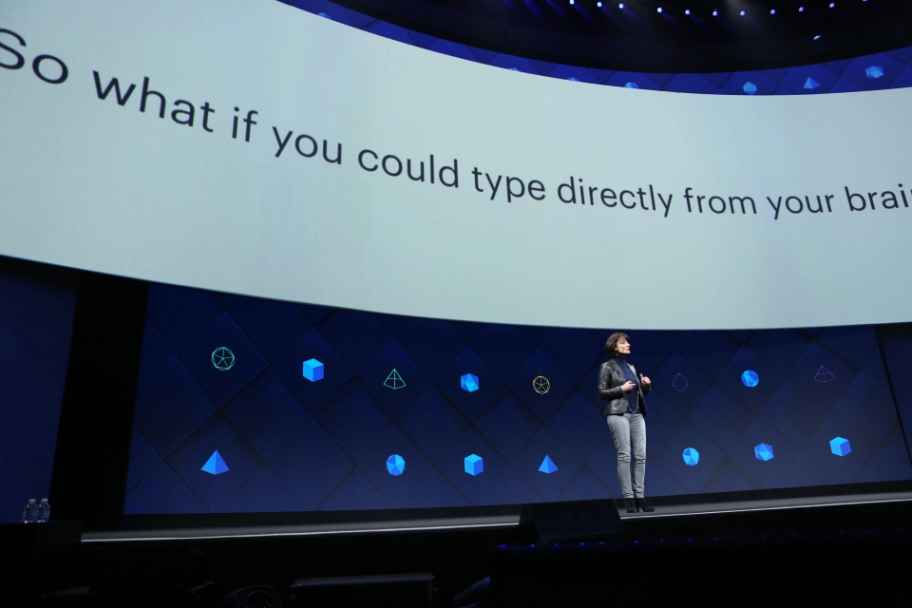

What if you could type directly from your brain? That’s the question Facebook is asking, and the social giant is on the verge of changing the way we interact with computers. Specifically, Facebook’s goal is to create a silent speech system capable of typing 100 words per minute straight from your brain, which it says is five times faster than you can type on a smartphone today.

“This isn’t about decoding your random thoughts. Think of it like this: You take many photos and choose to share only some of them. Similarly, you have many thoughts and choose to share only some of them,” Facebook explained. “It is more about decoding the words you’ve already decided to share by sending them to the speech center of your brain. It’s a way to communicate with the speed and flexibility of your voice and the privacy of text. We want to do this with non-invasive, wearable sensors that can be manufactured at scale.”

Facebook first introduced its brain-computer interface (BCI) program at its F8 developer conference in 2017. Yesterday, Facebook said it’s getting closer to making its brain-reading computer a reality. In early tests carried out with its academic research partners, a brain-computer interface was used to decode speech directly from the human brain onto a screen. The early results offer a glimpse of how the technology could eventually be used in augmented reality glasses.

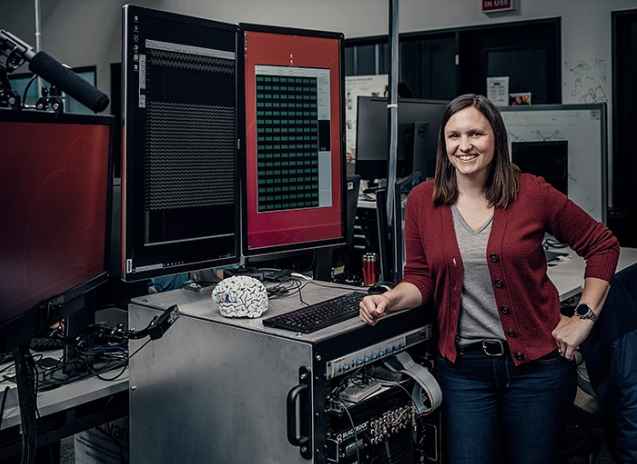

Facebook Reality Labs (FRL) is a division of Facebook that brings together a world-class team of researchers, developers, and engineers to create the future of virtual and augmented reality. Emily Mugler and Mark Chevillet are both members of FRL. Mugler is an engineer on the BCI team at Facebook Reality Labs; she joined Facebook after learning during a 2017 F8 talk that the company was pursuing a non-invasive, wearable BCI device for speech.

Emily Mugler, an engineer on the BCI team at Facebook Reality Labs.

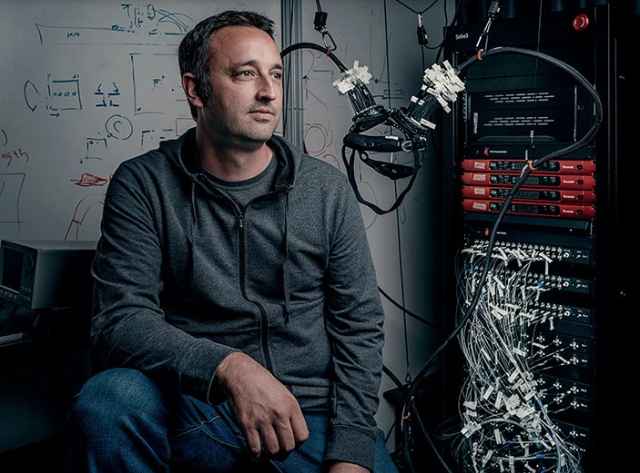

Mark Chevillet is the director of the brain-computer interface (BCI) research program at Facebook Reality Labs. The aim of the program he helped to build is to develop a non-invasive, silent speech interface that will let people type just by imagining the words they want to say – a technology that could one day be a powerful input for all-day wearable AR glasses.

Mark Chevillet, the director of the brain-computer interface (BCI) research program at Facebook Reality Labs.

FRL has been working with researchers from the University of California, San Francisco (UCSF), to develop a non-invasive wearable device that allows people to type using their thoughts. Yesterday, in the journal Nature Communications, UCSF neurosurgeon Edward Chang and David Moses, a postdoctoral scholar in Chang’s lab, published the results of their study in an article titled “Real-time decoding of question-and-answer speech dialogue using human cortical activity.” The study demonstrated that brain activity recorded while people speak could be decoded almost instantly into text on a computer screen.

While previous decoding work was done offline, the key contribution of this paper is that the UCSF team was able to decode a small set of full, spoken words and phrases from brain activity in real time, a first in the field of BCI research. The researchers emphasize that their algorithm is so far only capable of recognizing a small set of words and phrases, but ongoing work aims to translate much larger vocabularies with dramatically lower error rates.

Three epilepsy patients undergoing treatment at the UCSF Medical Center participated in the study. For the clinical purpose of localizing seizure foci, electrocorticography (ECoG) arrays were surgically implanted on the cortical surface of one hemisphere in each participant. All participants were right-handed, with left-hemisphere language dominance determined by their clinicians. The researchers say the findings could help develop a communication device for patients who can no longer speak after severe neurological injuries such as brainstem stroke and spinal cord injury.

“Today we’re sharing an update on our work to build a non-invasive wearable device that lets people type just by imagining what they want to say,” Andrew “Boz” Bosworth, Facebook vice president of AR/VR, said in a tweet. “Our progress shows real potential in how future inputs and interactions with AR glasses could one day look.”

https://twitter.com/boztank/status/1156228719129665539