Google announced Open Images V4, the largest existing dataset with object location annotations, along with the Open Images Challenge

Advances in machine learning and artificial intelligence have accelerated discovery and achievement in Computer Vision. This results in systems that can automatically caption images to apps which can then create natural language replies in response to shared photos. Much of this progress can be attributed to publicly available image datasets, such as COCO and ImageNet for supervised learning, and YFCC100M for unsupervised learning. Back in 2016, Google introduced Open Images, a dataset consisting of ~9 million URLs to images that have been annotated with labels spanning over 6000 categories. As part of the project, Google labels covered more real-life entities than the 1000 ImageNet classes, with enough images to train a deep neural network from scratch. The images are later listed as having a Creative Commons Attribution license.

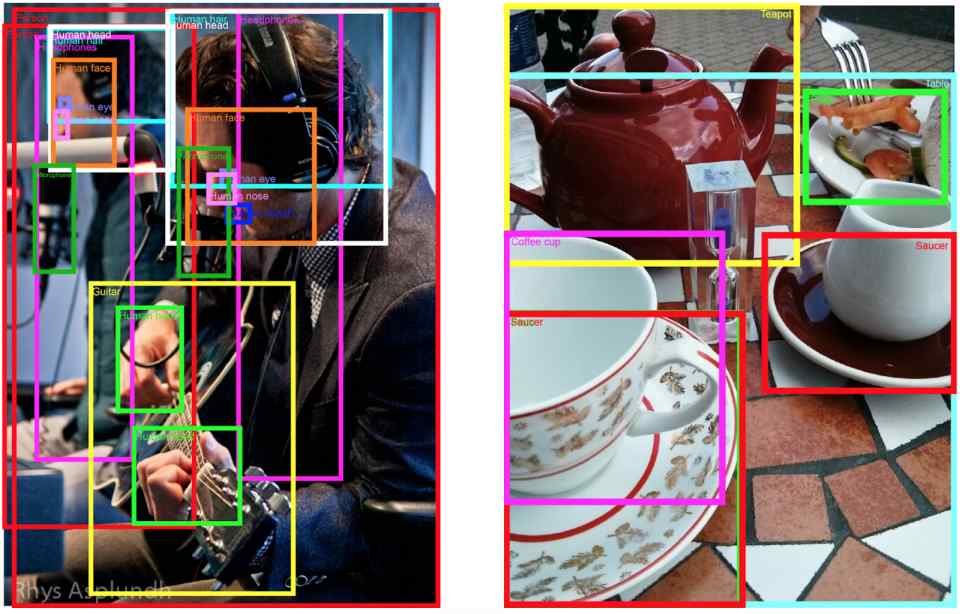

Since its initial release, Google team has been hard to update and refine the dataset, in order to provide a useful resource for the computer vision community to develop new models. Yesterday, Google announced Open Images V4. The version contains 15.4 million bounding-boxes for 600 categories on 1.9 million images, making it the largest existing dataset with object location annotations. The boxes have been largely manually drawn by professional annotators to ensure accuracy and consistency. The images are very diverse and often contain complex scenes with several objects (8 per image on average; visualizer).

In addition to the release announcement, Google also introduced the Open Images Challenge, a new object detection challenge to be held at the 2018 European Conference on Computer Vision (ECCV 2018). The Open Images Challenge follows in the tradition of PASCAL VOC, ImageNet and COCO, but at an unprecedented scale. According to Google, this challenge is unique in several ways:

- 12.2M bounding-box annotations for 500 categories on 1.7M training images,

- A broader range of categories than previous detection challenges, including new objects such as “fedora” and “snowman.”

- In addition to the object detection main track, the challenge includes a Visual Relationship Detection track, on detecting pairs of objects in particular relations, e.g. “woman playing guitar.”

“The training set is available now. A test set of 100k images will be released on July 1st 2018 by Kaggle. Deadline for submission of results is on September 1st 2018. We hope that the very large training set will stimulate research into more sophisticated detection models that will exceed current state-of-the-art performance, and that the 500 categories will enable a more precise assessment of where different detectors perform best. Furthermore, having a large set of images with many objects annotated enables to explore Visual Relationship Detection, which is a hot emerging topic with a growing sub-community,” Google said in a blog statement on its website.

In addition to the above, Open Images V4 also contains 30.1 million human-verified image-level labels for 19,794 categories, which are not part of the Challenge. The dataset includes 5.5M image-level labels generated by tens of thousands of users from all over the world at crowdsource.google.com.