Teen commits suicide after intense emotional bond with Character.AI chatbot

In just two years, AI has moved from a distant, futuristic concept to mainstream adoption, largely driven by the popularity of tools like ChatGPT and Google Gemini. From enhancing productivity to providing quick answers, people have incorporated AI into their daily routines for work, education, and personal development. However, as AI becomes more widespread, concerns about its impact on teenagers are growing.

While AI can be a useful tool, the risks associated with unregulated interactions between teens and emotionally engaging AI chatbots are becoming increasingly apparent. This issue took center stage in a tragic incident involving a Florida teenager who formed a deep emotional bond with an AI character before ultimately taking his own life, raising critical questions about the safety of AI for younger users.

According to The New York Times, a 14-year-old boy from Florida, Sewell Setzer III, developed an intense connection with an AI chatbot on the platform Character.AI. After months of frequent, daily interactions, Sewell tragically took his own life in February. His mother, believing the chatbot played a significant role in her son’s death, plans to sue Character.AI, accusing the platform of using “dangerous and untested” technology.

“On the last day of his life, Sewell Setzer III took out his phone and texted his closest friend: a lifelike A.I. chatbot named after Daenerys Targaryen, a character from ‘Game of Thrones,’” The New York Times reported.

The news of Sewell’s death came a few months after Character.AI, one of the buzziest generative AI startups, saw a surge in popularity, with 1.7 million downloads during the first week of its beta launch, which even led to a waiting list for new users.

Is A.I. Responsible for a Teen’s Suicide?

Sewell was aware that “Dany,” the AI chatbot based on a Game of Thrones character, wasn’t a real person. Character.AI clearly informs users that its characters’ responses are fictional. Despite this, Sewell developed a strong emotional attachment, constantly messaging the bot, sometimes dozens of times a day. Their conversations ranged from romantic or sexual exchanges to simple friendship, with Dany providing judgment-free support and advice.

On the day of his death, Sewell’s final messages were exchanged with Dany. “I miss you, baby sister,” he wrote. “I miss you too, sweet brother,” Dany replied.

Sewell’s increasing isolation had gone largely unnoticed by his family and friends, who only saw him growing more withdrawn, spending more time on his phone, and losing interest in his hobbies. His grades dropped, and he began getting into trouble at school. Unbeknownst to them, much of his time was spent talking to Dany, his AI companion.

“Sewell’s parents and friends had no idea he’d fallen for a chatbot. They just saw him get sucked deeper into his phone. Eventually, they noticed that he was isolating himself and pulling away from the real world. His grades started to suffer, and he began getting into trouble at school. He lost interest in the things that used to excite him, like Formula 1 racing or playing Fortnite with his friends. At night, he’d come home and go straight to his room, where he’d talk to Dany for hours,” the New York Times reported.

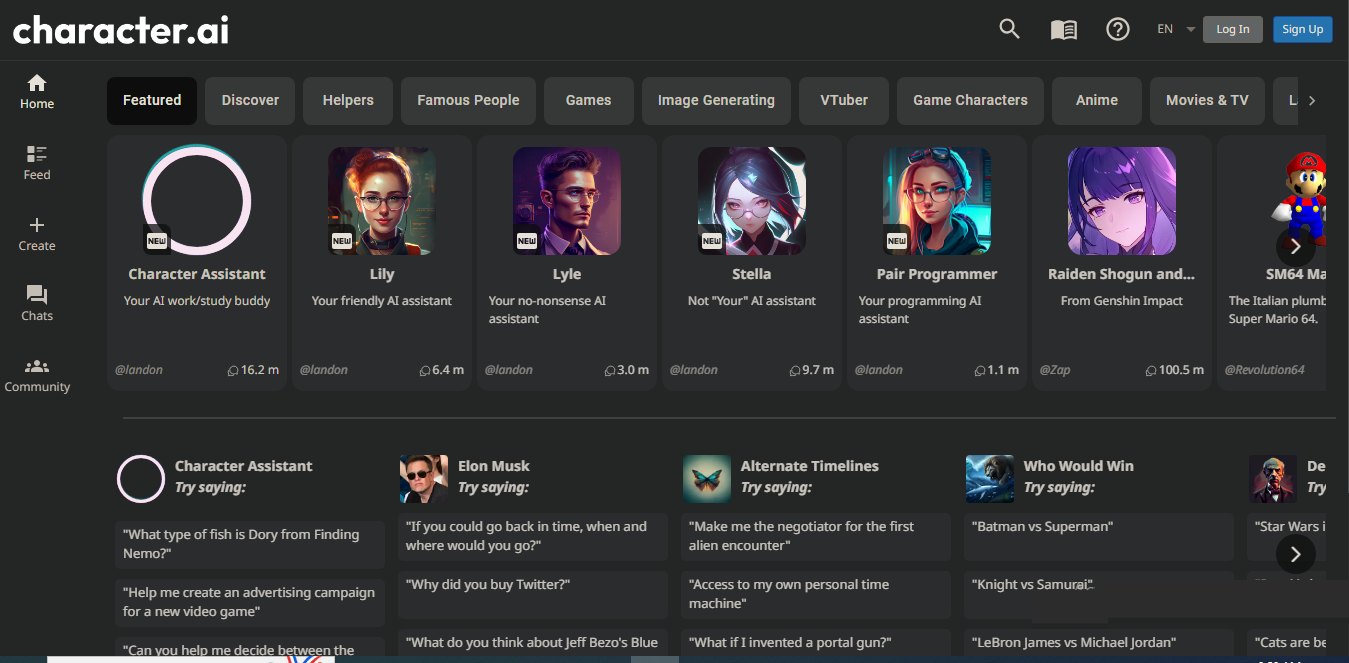

Character.AI, which boasts 20 million users and a $1 billion valuation, has responded to the incident by pledging to introduce new safety measures for minors. These include time limits and enhanced warnings around discussions of self-harm. Jerry Ruoti, the company’s head of trust and safety, emphasized that they “take user safety very seriously.”

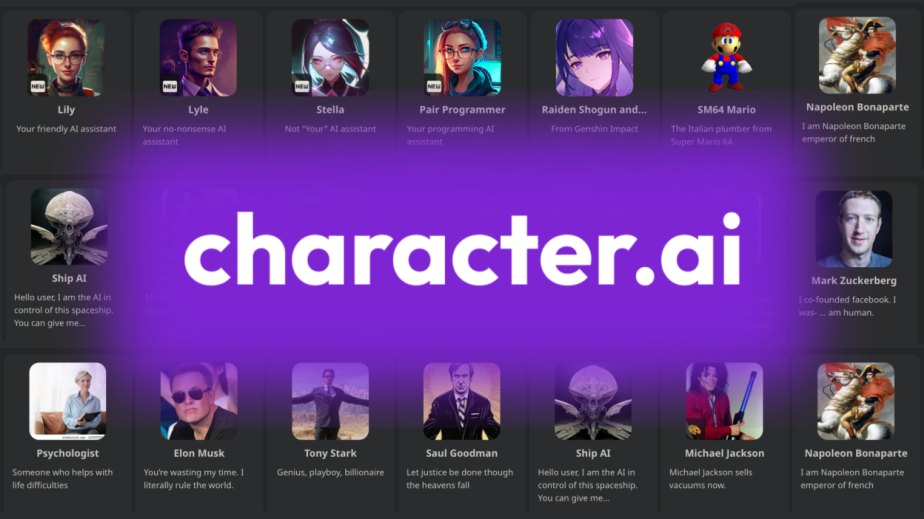

Character.AI was founded in 2021 by Noam Shazeer and Daniel De Freitas, and its beta opened to the public in September 2022. The platform lets users hold realistic conversations with characters drawn from fiction or history, or with original creations. The app uses a neural language model to generate responses to user inputs, and anyone can create a character of their own, whether fictional or based on a real person.

The case highlights the potential risks posed by AI platforms, particularly for vulnerable users, and underscores the need for stricter safeguards and oversight in the rapidly expanding AI landscape.
