Nvidia just commoditized the voice AI stack with PersonaPlex-7B

NVIDIA just made every voice AI API a commodity with the launch of the new conversational AI model, PersonaPlex-7B. The open-source full-duplex model collapses ASR, LLM, and TTS into a single 7B system—shifting voice AI margins from APIs to GPUs.

On January 15, Nvidia quietly released something that may prove more disruptive than another larger language model: PersonaPlex-7B, a real-time speech-to-speech conversational system that doesn’t just improve voice AI — it collapses the traditional architecture behind it.

Unlike traditional voice stacks that stitch together automatic speech recognition (ASR), a language model (LLM), and text-to-speech (TTS), PersonaPlex collapses the entire pipeline into a single full-duplex model. It listens and speaks at the same time. No handoffs. No stitched latency. No cascading delays.

And best of all, PersonaPlex-7B is fully open-source.

That combination changes the strategic calculus for voice AI startups.

The Pipeline Voice AI Was Built On

For years, voice AI has been built as a pipeline.

User speech flows into an automatic speech recognition model. The transcribed text is sent to a language model. The output is then routed through a text-to-speech system. Three models. Three inference passes. Three layers of latency. Three separate billing surfaces.

ASR → LLM → TTS.

It works. But it’s stitched together. And at scale, it’s expensive. Instead of cascading systems, Nvidia delivers a single 7-billion-parameter full-duplex transformer that:

-

Runs on a single A100

-

Ships with open weights under a permissive commercial license

-

Delivers 0.170-second latency for turn-taking

-

Handles interruptions in 0.240 seconds

PersonaPlex removes that structure entirely. Instead of passing audio between disconnected systems, Nvidia built a single 7-billion-parameter full-duplex transformer that listens and speaks simultaneously. Incoming audio is encoded with a neural codec and streamed directly into the model. As the user speaks, PersonaPlex updates its internal state and begins generating spoken responses, predicting both text and audio tokens in an autoregressive manner.

There’s no handoff between models. No sequential processing chain. Listening and speaking occur concurrently in a dual-stream configuration.

The result feels less like a voice assistant and more like a conversation.

Turn-taking latency measures around 0.170 seconds. Interruptions register in approximately 0.240 seconds. The model can overlap speech, handle barge-ins, and produce contextual backchannels like “uh-huh” or “okay” without breaking rhythm.

Instead of waiting for silence before responding, it behaves as if it understands timing. Below is an audio of PersonaPlex and Rajarshi Roy sharing jokes. Click on the link to listen.

Breaking the Trade-Off: Naturalness vs Persona Control

Full-duplex systems aren’t new. Kyutai’s Moshi showed that simultaneous listening and speaking dramatically improves conversational flow.

The trade-off was flexibility. You often have one fixed voice and limited behavioral steering.

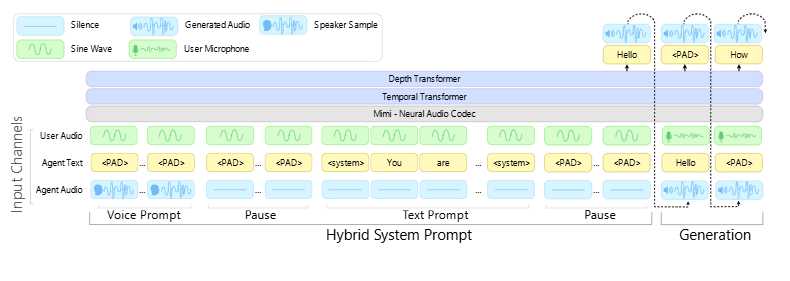

PersonaPlex introduces hybrid prompting.

Before a conversation begins, the system is conditioned on two inputs:

-

A voice prompt — audio tokens defining tone, accent, and speaking style

-

A text prompt — natural language describing role, background, and scenario context

Together, those prompts define the model’s conversational identity.

You can instruct it to be a wise and friendly teacher. A banking customer service agent verifying a suspicious transaction. A medical receptionist is collecting intake information. Even an astronaut managing a reactor meltdown on a Mars mission.

In each case, the model maintains a consistent persona while handling interruptions and shifts in emotional tone.

For the first time, developers don’t have to choose between conversational realism and persona control.

They get both.

Under the Hood: Architecture and Training

PersonaPlex is built on the Moshi architecture and powered by a language backbone called Helium. Audio is processed through the Mimi neural codec encoder and decoder stack, operating at 24kHz. The system runs in a dual-stream configuration that enables simultaneous listening and speaking.

PersonaPlex defines conversational behavior through two coordinated inputs. A voice prompt — an audio embedding that captures tone, accent, and prosody — shapes how the system sounds. A text prompt — written in natural language — defines the role, background, and situational context. Processed together, these signals allow the model to maintain a coherent and consistent persona throughout the interaction.

PersonPlex Hybrid Prompting Architecture. (Credit: Nvidia)

Training posed a unique challenge. Natural conversation requires overlapping speech, interruptions, pauses, and emotional cues — data that is scarce and difficult to structure.

Nvidia blended two sources:

-

7,303 real human conversations (1,217 hours) from the Fisher English corpus

-

Over 140,000 synthetic assistant and customer service dialogues generated using large language models and TTS systems

The synthetic data enforces task-following behavior. The real recordings supply natural speech patterns that synthetic systems struggle to replicate convincingly.

The final model disentangles conversational richness from task adherence, retaining the broad generalization capability of its pretrained foundation.

In Nvidia’s internal benchmarks, PersonaPlex scored 2.95 on dialog naturalness compared to Gemini’s 2.80 mean opinion score and handled interruptions better than every commercial system tested.

And it runs on a single A100.

The weights are released under a permissive commercial license.

NVIDIA Made Every Voice AI API a Commodity with PersonaPlex-7B

The Economic Shift Beneath the Model

This is where the story changes.

Today, most voice startups rely on per-minute API billing. OpenAI’s Realtime API charges $0.06 per minute for input and $0.24 per minute for output. Gemini Live bills roughly 25 tokens per second of audio.

At scale, those costs become structural.

What PersonaPlex suggests is that the core capability — natural, low-latency, persona-controlled voice interaction — may no longer require a proprietary API.

It may require a GPU.

Nvidia doesn’t need to monetize PersonaPlex directly. They monetize the infrastructure. Every startup that self-hosts the model instead of paying per-minute fees becomes another GPU customer. Every enterprise that internalizes voice inference becomes another hardware contract.

PersonaPlex was downloaded more than 330,000 times in its first month.

That isn’t just adoption.

It’s ecosystem positioning.

Where the Voice AI Margin Is Moving

For years, voice AI margins lived at the application layer.

Closed APIs.

Per-minute billing.

Proprietary ecosystems.

If high-quality conversational speech becomes an open, deployable capability, that margin doesn’t vanish.

It migrates.

It moves downward — toward hardware efficiency, GPU optimization, deployment architecture, and compute ownership.

Nvidia profits whether OpenAI wins, Gemini wins, or startups build their own stacks.

That’s the advantage of owning the compute layer.

The Bigger Shift

The release of PersonaPlex-7B isn’t just about conversational quality. It’s about control over where value accrues. When foundational capabilities become open and affordable, profit doesn’t disappear — it migrates. In voice AI, that migration may already be underway.

APIs will still exist. Voice startups will still charge per minute. But once a 7B open model running on a single GPU can match or exceed commercial systems, pricing power weakens. The center of gravity shifts.

Nvidia didn’t just release a model.

It changed the negotiating leverage of the entire voice AI market.

Watch the video below to see PersonaPlex in action.