Amazon’s hidden data centers: AWS quietly operates more than 900 facilities across 50+ countries

For years, the general sense of AWS’s physical scale centered on a few widely known regions. Huge campuses in Northern Virginia, sprawling complexes in Oregon, and a handful of high-profile projects overseas shaped the public perception. New internal documents tell a very different story. AWS is operating more than 900 data center facilities across more than 50 countries, creating a global web of infrastructure that is far broader and more deeply embedded than most people realized.

This isn’t just a surprising statistic. It’s the physical foundation behind the AI surge, the cloud services powering countless companies, and the unseen network supporting everyday digital activity.

“Amazon.com Inc.’s data center operation is far larger than commonly understood, spanning more than 900 facilities across over 50 countries,” Bloomberg reported, citing documents reviewed by Bloomberg and the investigative site SourceMaterial.

Amazon’s Hidden Data Centers: Inside AWS’s 900+ Facilities Spanning Over 50 Countries

AWS has become synonymous with massive, purpose-built data centers. Regions like Northern Virginia and Boardman, Oregon, are filled with company-owned or long-term leased facilities built for extreme density and heavy workloads. These sites are real, and they are enormous.

The newly surfaced documents reveal that those high-profile campuses represent only a slice of Amazon’s true footprint. A large and growing share of AWS capacity lives inside colocation facilities, where AWS rents space in data centers owned and operated by third parties. As of early 2024, there were more than 440 of these sites in active operation, accounting for roughly 20% of AWS’s total computing capacity.

Some of these locations contain only a few racks of servers. Others fill entire buildings. In cities such as Frankfurt and Tokyo, AWS occupies enough space to support dense regional demand without waiting years for new construction to finish. This approach allows AWS to move faster, stay closer to customers, and avoid the delays that come with permitting, zoning, and large-scale builds.

The Unseen Scale of Amazon Data Centers: Beyond the Mega-Campuses

AWS’s colocation partners range from global operators like Equinix and NTT to regional firms such as Markley Group in Boston. Together, these sites feed into 38 geographic regions, each supported by at least three Availability Zones. Those zones remain isolated from one another for resilience, while still being close enough to provide low-latency performance.

AWS has also extended its presence through Local Zones and Wavelength Zones, pushing compute into major metro areas and directly into 5G networks. This allows applications that demand ultra-low latency, such as real-time gaming, autonomous systems, and AI inference at the edge, to work without the delay of cross-country data transfers.

What emerges from all of this is a sprawling, adaptable, and difficult-to-map infrastructure giant quietly operating at a global scale.

AI’s insatiable appetite and the race for compute

The timing of this disclosure is revealing. AI workloads are consuming more compute than anything that came before them. Training large models requires massive clusters of GPUs and specialized chips running around the clock. Even individual AI queries can draw meaningful amounts of energy. Analysts now expect data center power consumption to jump sharply over the next few years, driven largely by AI.

AWS is positioned at the center of that demand. Services such as Bedrock and its lineup of custom AI chips are driving huge revenue, and the company is spending heavily to keep up. In the first half of 2025 alone, AWS committed more than $50 billion toward new projects in places like Mississippi and Japan. Microsoft and Google have also announced aggressive expansion, but AWS’s heavy use of colocation gives it a quieter path to new capacity.

That “shadow” expansion makes it harder for competitors to track exactly how much infrastructure is coming online and where global supply is being positioned. It also strengthens AWS’s ability to respond quickly as AI adoption spreads across industries, from healthcare and finance to logistics and robotics.

That speed and scale come with trade-offs that are increasingly hard to ignore.

Environmental strain and the hidden cost of scale

For all its ingenuity, AWS’s vast footprint comes with real environmental pressure. Large data centers can consume as much electricity as small cities, with a single major facility at times matching the draw of more than 100,000 households. AI workloads make the strain heavier, and not just in the data halls themselves. A significant share of the water tied to cloud computing is actually consumed in generating the electricity those facilities use, in some cases approaching 60% of total consumption.

Nearly half of U.S.-based data centers sit in regions already facing water stress. Many rely on evaporative cooling, which pulls billions of liters of water each year to keep servers from overheating. That reality has raised tension in communities where farms, homes, and businesses are already competing for the same limited supply.

AWS points to meaningful efficiency gains in response. The company reports a global Power Usage Effectiveness (PUE) of 1.15 for 2024, meaning overhead such as cooling adds roughly 15% on top of the energy used for computing, or about 13% of total facility energy. Its custom-built chips, including Trainium and Inferentia, are engineered to cut power demand for AI workloads by as much as 81% compared to typical on-premises systems.
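For readers who want to check the arithmetic behind a PUE figure, here is a minimal sketch. It assumes the standard definition of PUE as total facility energy divided by IT equipment energy; the function names are illustrative and do not come from AWS or the reporting.

```python
def overhead_fraction_of_it(pue: float) -> float:
    """Overhead energy (cooling, power distribution, etc.) relative to IT energy."""
    if pue < 1.0:
        raise ValueError("PUE cannot be below 1.0 under the standard definition")
    return pue - 1.0


def overhead_fraction_of_total(pue: float) -> float:
    """Overhead energy as a share of total facility energy."""
    if pue < 1.0:
        raise ValueError("PUE cannot be below 1.0 under the standard definition")
    return (pue - 1.0) / pue


pue_2024 = 1.15  # figure AWS reports for 2024, per the article

print(f"Overhead relative to IT energy: {overhead_fraction_of_it(pue_2024):.1%}")
print(f"Overhead share of total energy: {overhead_fraction_of_total(pue_2024):.1%}")
```

Under that assumption, a PUE of 1.15 works out to overhead equal to 15% of computing energy, which is about 13% of everything the facility draws.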

On the water front, Amazon has pledged to become “water positive” by 2030, aiming to replenish more water than it consumes. That effort already includes projects such as wetland restoration in Chile and the use of treated wastewater for cooling in multiple U.S. locations. In 2023 alone, AWS reported returning roughly 3.5 billion liters of water through conservation work like leak detection and modernized irrigation, moving past the halfway point toward its stated goal. Evaporative cooling can also reduce peak energy demand by 25–35% in hotter climates, easing pressure on local power grids during periods of extreme heat.

Critics point to blind spots that still deserve attention. Indirect water use from thermoelectric plants can multiply a site’s true water footprint by three to ten times, and publicly reported Water Usage Effectiveness figures don’t fully reflect that hidden draw. In arid locations such as Arizona and Chile, new facilities have added stress to already limited supplies, triggering community pushback and new local rules like Santa Clara’s requirement for carbon-free energy sourcing. On a global scale, the continued rise of data centers could intensify water scarcity for as many as 780 million people, strengthening calls for circular solutions such as large-scale wastewater reuse, which can reduce overall consumption by up to half.

Even with growing scrutiny, construction continues at a steady pace, moving faster than policy in many regions. That gap between expansion and oversight may become one of the defining challenges of the AI infrastructure era.

Regulatory ripples and competitive shadows

The sheer complexity of AWS’s distributed footprint makes oversight difficult. With facilities scattered across dozens of countries and hundreds of local jurisdictions, it becomes harder for communities and regulators to measure true energy demand, water usage, and environmental impact.

In the United States, some regions have offered major tax incentives to attract new data center projects, only to face backlash once the strain on local grids and water supplies becomes visible. In Europe, lawmakers are pushing for greater transparency around the environmental impact of digital infrastructure, including the AI systems that depend on it.

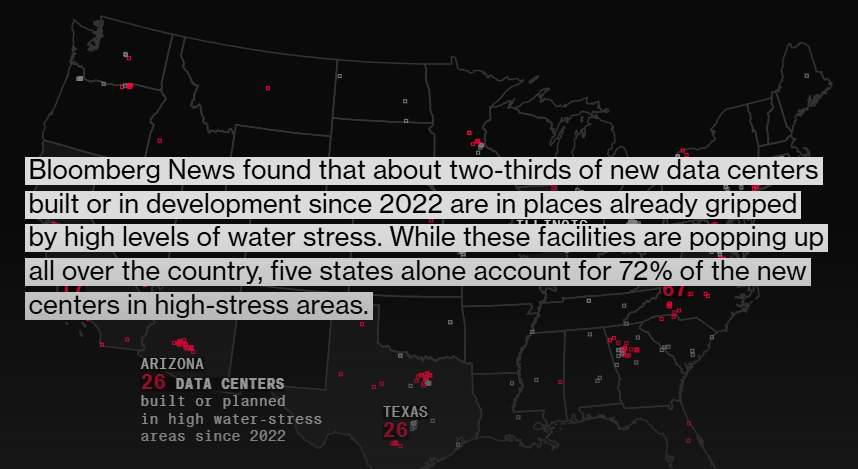

“Bloomberg News found that about two-thirds of new data centers built or in development since 2022 are in places already gripped by high levels of water stress. While these facilities are popping up all over the country, five states alone account for 72% of the new centers in high-stress areas.”

For competitors, the scale of AWS’s network highlights a widening gap. Microsoft and Google have poured billions into owned campuses and headline projects, but AWS’s extensive colocation strategy allows it to add capacity in a quieter, more flexible way. Traditional data center operators increasingly find themselves squeezed as hyperscalers take over more of the market’s available space and power.

AWS spokesperson Aisha Johnson has described the company’s strategy as one that “balances ownership with flexibility” to meet rising demand. That balance is part of what allowed this infrastructure to grow so widely and with so little public visibility.

Why this moment matters

AWS’s 900-plus facilities are more than just assets on a balance sheet. They represent how deeply cloud computing and AI are now woven into physical geography. These sites influence power grids, water systems, zoning decisions, and regional economies. They enable new breakthroughs in medicine, climate science, and automation, even as they raise difficult questions about sustainability and transparency.

The future of AI will not be decided by algorithms alone. It will be shaped by land, energy, water, and the choices made by the companies that control the world’s digital backbone.

The servers that run this new era are spread across deserts, forests, suburbs, and industrial hubs around the globe. Now that the scale is coming into view, the next chapter will be defined by the balance between innovation and responsibility.