Llama 4 Scandal: Meta’s release of Llama 4 overshadowed by cheating allegations on AI benchmark

Meta rolled out its much-hyped Llama 4 models this past weekend, touting big performance gains and new multimodal capabilities. But the rollout hasn’t gone as planned. What was supposed to mark a new chapter in Meta’s AI playbook is now caught up in benchmark cheating accusations, sparking a wave of skepticism across the tech community.

Llama 4 Hits the Scene—Then the Headlines

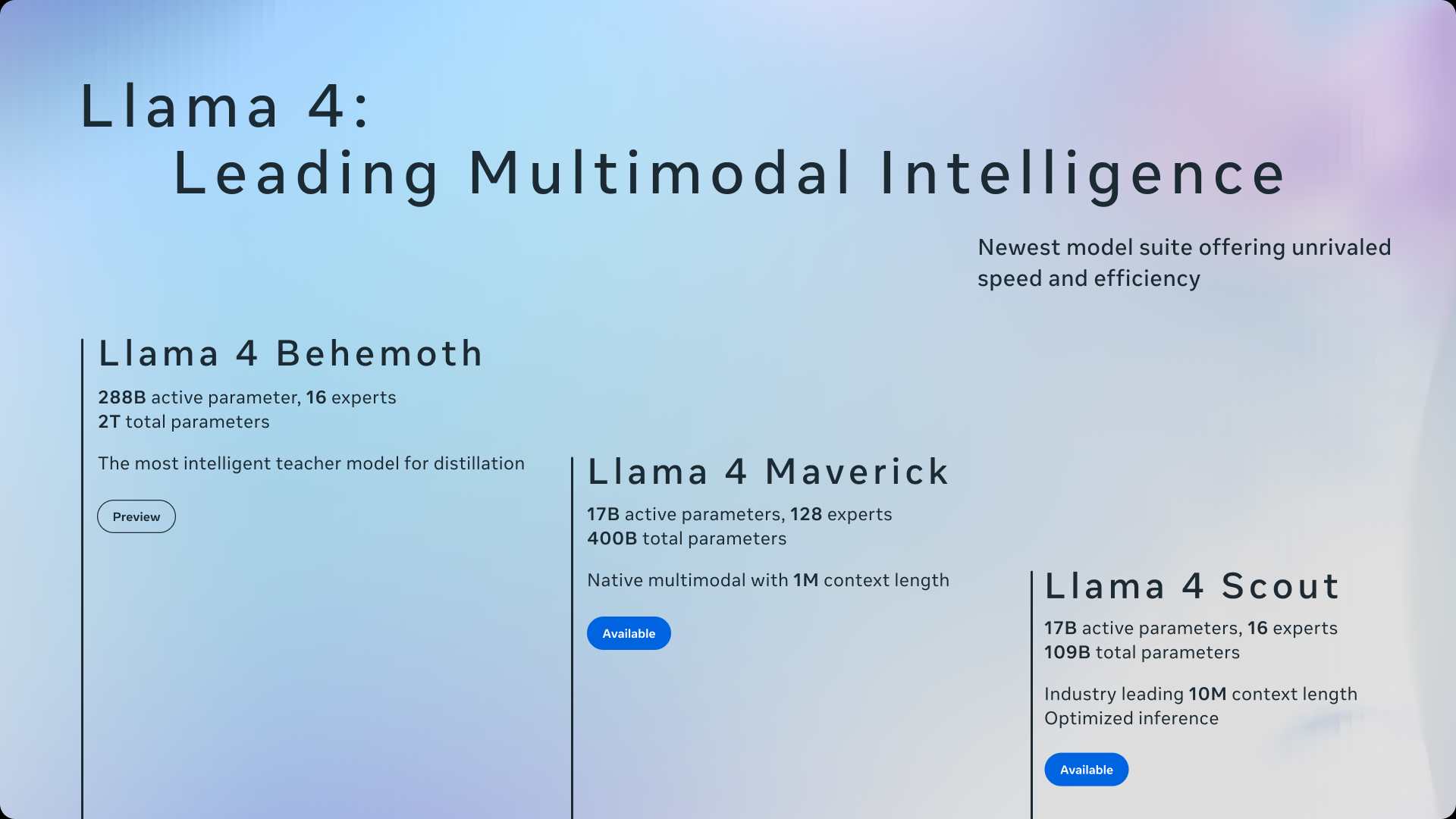

Meta introduced three models under the Llama 4 name: Llama 4 Scout, Llama 4 Maverick, and the still-training Llama 4 Behemoth. According to Meta, Scout and Maverick are already available on Hugging Face and llama.com, and integrated into Meta AI products across Messenger, Instagram Direct, WhatsApp, and the web.

Scout is a compact mixture-of-experts model with 17B active parameters and 16 experts, and Meta says it fits on a single NVIDIA H100 GPU with Int4 quantization. Meta claims it outperforms Mistral 3.1, Gemini 2.0 Flash-Lite, and Gemma 3 across a broad range of widely reported benchmarks. Maverick also has 17B active parameters but uses 128 experts. Meta says it beats GPT-4o and Gemini 2.0 Flash and matches DeepSeek V3 on reasoning and code generation with less than half the active parameters.

These models were distilled from Meta’s largest and most ambitious effort yet: Llama 4 Behemoth, a model with 288B active parameters that is still in training. Meta says Behemoth already outperforms GPT-4.5, Claude Sonnet 3.7, and Gemini 2.0 Pro on several STEM benchmarks.

It all sounds impressive. But soon after the launch, questions began to pile up.

The Benchmark Problem

Meta’s launch post paired its benchmark claims with detailed explanations of how the models were trained. “We developed a new training technique which we refer to as MetaP that allows us to reliably set critical model hyper-parameters such as per-layer learning rates and initialization scales. We found that chosen hyper-parameters transfer well across different values of batch size, model width, depth, and training tokens. Llama 4 enables open source fine-tuning efforts by pre-training on 200 languages, including over 100 with over 1 billion tokens each, and overall 10x more multilingual tokens than Llama 3,” the company wrote.

Llama 4 Maverick, the more powerful of the two released models, was at the center of the controversy. Meta showcased its performance on LM Arena, but critics noticed that the version tested there wasn’t the same as the public release. Meta’s own announcement described the LM Arena entry as an “experimental chat version” of Maverick, a custom-tuned variant rather than the model developers can download, which led to claims that the results were inflated.

Ahmad Al-Dahle, Meta’s VP of Generative AI, denied any foul play. He said the company didn’t train on the test sets and attributed reports of uneven quality to public implementations that still needed time to stabilize. Still, the damage was done. Social media erupted, with users accusing Meta of “benchmark hacking” and manipulating test conditions to make Llama 4 look stronger than it is.

Inside the Accusations

An anonymous user claiming to be a former Meta engineer posted on a Chinese forum, alleging that the team behind Llama 4 adjusted post-training datasets to get better benchmark scores. The post set off a firestorm on X and Reddit. Users began pointing to reported internal test discrepancies, alleged pressure from leadership to push ahead despite known issues, and a general sense that optics were prioritized over accuracy.

The term “Maverick tactics” began circulating, shorthand for playing loose with testing protocols to chase headlines.

Meta’s Response and What’s Missing

Meta addressed the concerns in an April 7 interview with TechCrunch, calling the accusations false and standing by the benchmarks. But critics say the company hasn’t offered enough evidence to back its claims. There’s no detailed methodology or whitepaper, and no access to the raw testing data. In an industry where scrutiny is growing, that lack of detail is making things worse.

Why It Matters

Benchmarks are a big deal in AI. They help developers, researchers, and companies compare models on neutral ground. But the system isn’t bulletproof: models can be overfitted to public test sets, and results can be massaged. That’s why transparency matters. Without it, trust erodes fast.

Meta says Llama 4 offers “best-in-class” performance, but right now, a chunk of the community isn’t buying it. And for a company that’s betting big on AI as a core pillar of its future, that kind of doubt is hard to shake.

The Bigger Picture

This isn’t just about Meta. There’s a growing concern across the AI space that benchmark results are becoming more about marketing than science. The Llama 4 episode is just the latest example of how companies can get called out—loudly—when the numbers don’t add up.

Whether these accusations hold up remains to be seen. For now, Meta’s denials stand against a flood of speculation. The company has ambitious plans for Llama 4, and the models themselves may be solid. But the rollout has raised more questions than it answered, and those questions aren’t going away until we see more transparency.

Llama 4 could end up being a major win for Meta. Or it could be remembered as the launch that sparked another round of trust issues in AI.