Hyperlume raises $12.5M in funding to fix AI data center bottlenecks with optical interconnects

As AI models scale past a trillion parameters and computing clusters expand to hundreds of thousands of GPUs, traditional interconnects are struggling to keep up. The next generation of AI will demand far higher levels of connectivity while cutting power consumption significantly.

Enter Hyperlume, a Canadian tech startup tackling these infrastructure limitations. Hyperlume is replacing aging copper-based solutions in AI data centers and high-performance computing with high-bandwidth, low-latency optical interconnects.

Today, Hyperlume announced it has raised $12.5 million in seed funding to bring its optical interconnect technology to market. The round was led by BDC’s Deep Tech Venture Fund and ArcTern Ventures, with backing from MUUS Climate Partners, SOSV, Intel Capital, and a strategic investment from LG Technology Ventures.

Pushing AI Infrastructure Beyond Its Limits

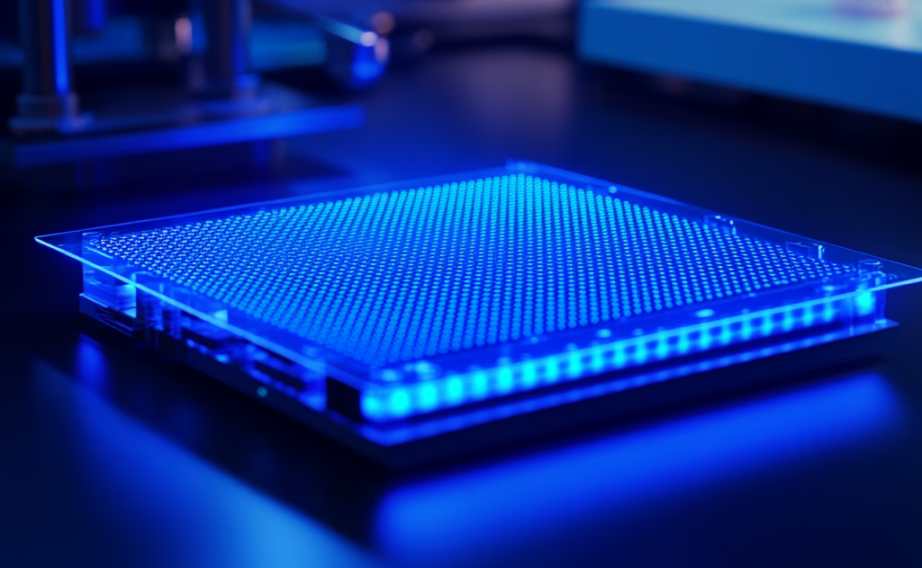

Founded in 2022 by Mohsen Asad and Hossein Fariborzi, Hyperlume builds its optical interconnects around ultra-fast microLEDs and advanced low-power circuitry. Where conventional copper interconnects run into bandwidth bottlenecks and power inefficiencies, the company says its approach moves data faster, reduces latency, and drastically cuts energy consumption.

By shifting away from traditional electronic interconnects, Hyperlume says its technology enables 10X compute performance, 5X power savings, and 4X lower costs compared to existing solutions. The company frames this efficiency boost as critical: AI workloads keep growing rapidly, demanding more from infrastructure while raising concerns about power usage and cooling requirements in massive data centers.

“This funding is a major milestone as we work to transform optical communication and create more efficient computing infrastructure,” said Mohsen Asad, co-founder and CEO of Hyperlume.

Today’s AI models are already pushing the limits of existing interconnects. As the industry scales up, new solutions are needed to handle the exponential growth in data movement while keeping power consumption in check.

Scaling AI Without the Energy Tradeoff

Hyperlume’s optical interconnects are pitched as an alternative built to handle massive AI workloads efficiently. With this funding, the company plans to expand product development, engineering, and R&D efforts while strengthening partnerships with hyperscalers, chip manufacturers, and AI infrastructure providers. The startup is also gearing up for production to meet demand for 800G and 1.6T (800 Gb/s and 1.6 Tb/s) interconnects.

“Data center carbon emissions are expected to more than double by 2030, which makes energy-efficient networking solutions critical,” said Murray McCaig, managing partner at ArcTern Ventures. “Hyperlume’s ultra-low-power microLED interconnect technology dramatically reduces energy consumption while delivering top-tier bandwidth performance.”

Intel Capital’s managing director, Srini Ananth, pointed to the increasing power demands of AI and the growing strain on legacy infrastructure. “Hyperlume’s technology eliminates the bottlenecks holding back AI performance and sets a new benchmark for energy efficiency in data centers,” he said.

With AI adoption accelerating, the industry needs breakthroughs that don’t just scale performance but also reduce energy consumption. Hyperlume is betting that optical interconnects will be the key to making AI infrastructure both faster and more sustainable.