Meta launches its Llama 3 open-source LLM on Amazon AWS

Posted On April 18, 2024

Following the launch of ‘Code Llama 70B’ in January, Meta has now released Llama 3, the latest iteration of its open-source LLM, on AWS infrastructure.

In an email to TechStartups, Amazon revealed that “Meta Llama 3 is now accessible through Amazon SageMaker JumpStart.” The new version follows its predecessor, Llama 2, which has been available on Amazon SageMaker JumpStart and Amazon Bedrock since 2023.

Llama 3 comes in two parameter sizes, 8B and 70B, both with an 8k context length, and aims to cover a wide range of use cases with improvements in reasoning, code generation, and instruction following. It uses a decoder-only transformer architecture and a new tokenizer with a 128k-token vocabulary, which improves model performance.

“Llama 3 comes in two parameter sizes — 8B and 70B with 8k context length — that can support a broad range of use cases with improvements in reasoning, code generation, and instruction following,” Amazon wrote.

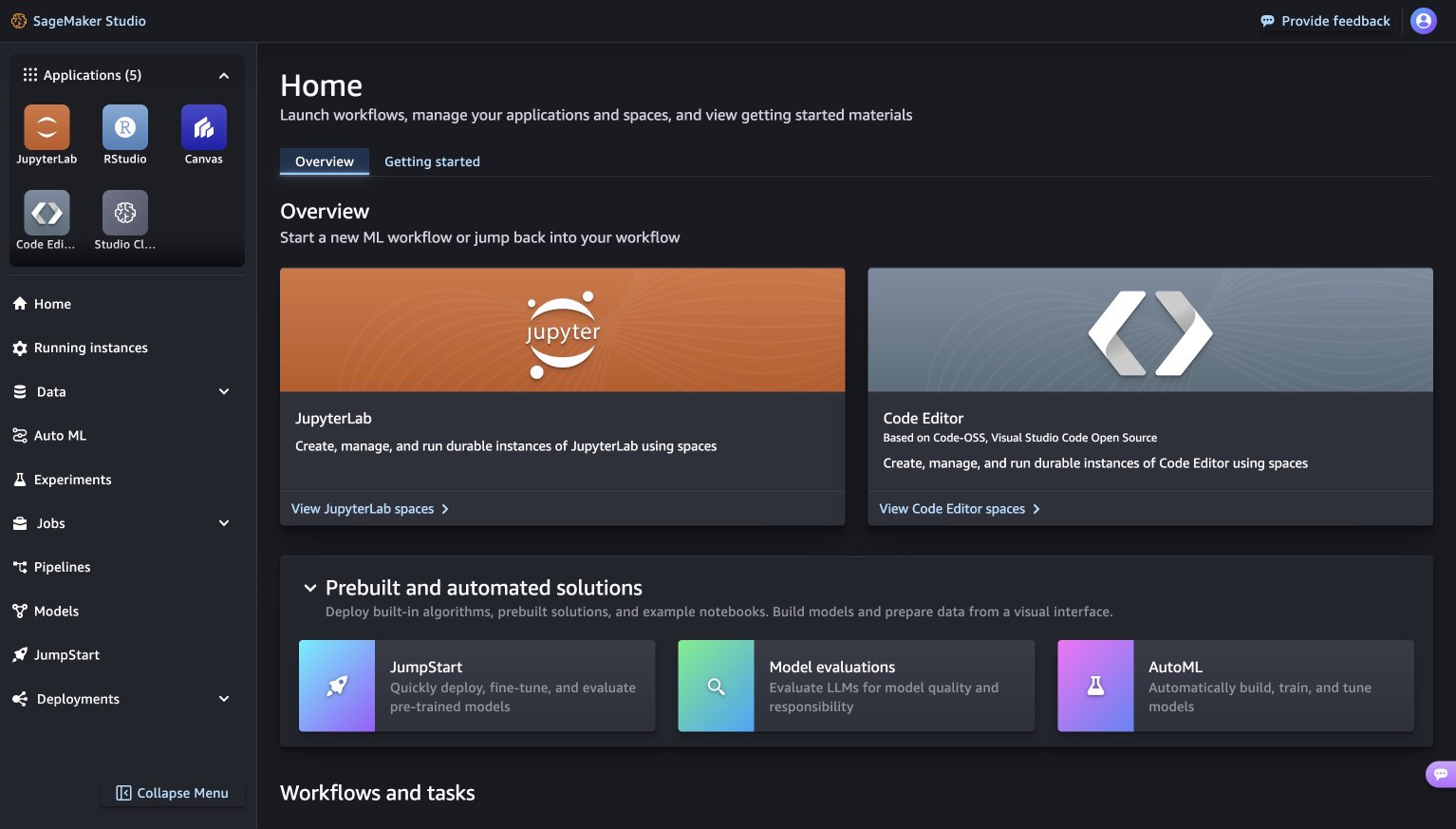

If you’re using SageMaker Studio, you’ll find SageMaker JumpStart right there, offering a bunch of handy stuff like pre-trained models, notebooks, and ready-made solutions. Just head over to the “Prebuilt and automated solutions” section, and you’re all set!

AWS SageMaker JumpStart (Source: Amazon AWS)

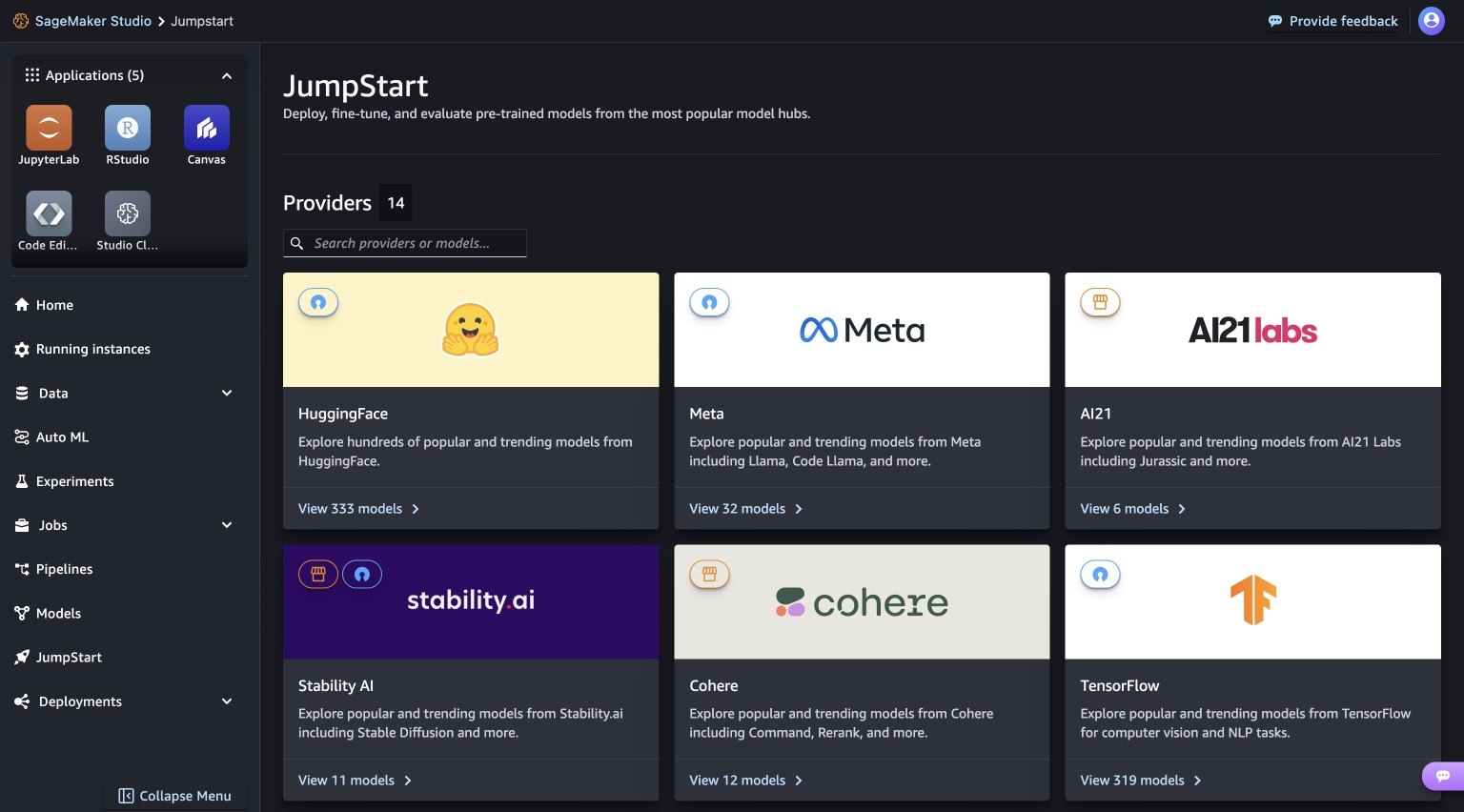

When you land on the SageMaker JumpStart page, it’s pretty straightforward to find different models. Just browse through the hubs, which are basically sections named after the folks who provide the models. For example, if you’re looking for Llama 3 models, head to the Meta hub. And if you don’t spot them right away, no worries—just try updating your SageMaker Studio version by shutting it down and restarting. That should do the trick!

AWS JumpStart (Source: Amazon AWS)
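
If you would rather skip the Studio UI, the same model can be deployed from the SageMaker Python SDK. The snippet below is a minimal sketch, not Amazon's official recipe: the model ID `meta-textgeneration-llama-3-8b`, the payload layout (`inputs` plus `parameters`), and the helper names `build_payload` and `deploy_and_query` are assumptions for illustration, so check the model card in the Meta hub before relying on them.

```python
from typing import Any, Dict

# Assumed JumpStart model ID for the 8B base model; confirm it on the model card.
LLAMA3_MODEL_ID = "meta-textgeneration-llama-3-8b"


def build_payload(prompt: str, max_new_tokens: int = 64,
                  temperature: float = 0.6, top_p: float = 0.9) -> Dict[str, Any]:
    """Build a text-generation request (assumed schema: 'inputs' + 'parameters')."""
    if max_new_tokens <= 0:
        raise ValueError("max_new_tokens must be positive")
    return {
        "inputs": prompt,
        "parameters": {
            "max_new_tokens": max_new_tokens,
            "temperature": temperature,
            "top_p": top_p,
        },
    }


def deploy_and_query(prompt: str, model_id: str = LLAMA3_MODEL_ID) -> Any:
    """Deploy the model to an endpoint, send one prompt, then delete the endpoint.

    Requires AWS credentials, a SageMaker execution role, and instance quota.
    The endpoint is billed for as long as it is running.
    """
    # Imported lazily so build_payload works without the SageMaker SDK installed.
    from sagemaker.jumpstart.model import JumpStartModel

    model = JumpStartModel(model_id=model_id)
    # Llama models are gated by Meta's license; accept_eula=True records acceptance.
    predictor = model.deploy(accept_eula=True)
    try:
        return predictor.predict(build_payload(prompt))
    finally:
        predictor.delete_endpoint()  # avoid leaving a billable endpoint behind
```

Calling `deploy_and_query("I believe the meaning of life is")` would create a real endpoint, so it is best run only from an account where that cost is expected.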

Meta has also refined its post-training procedures to substantially reduce false refusal rates, improve alignment, and increase the diversity of model responses. Users can combine Llama 3’s performance with MLOps controls through Amazon SageMaker features such as SageMaker Pipelines, SageMaker Debugger, and container logs. In addition, the model is deployed in a secure AWS environment under your VPC controls, which helps protect your data.

At present, AWS stands as the sole cloud provider offering customers access to the most sought-after and cutting-edge foundation models. Amazon Bedrock consistently takes the lead in making these popular models readily available.

Functioning as a fully managed service, Amazon Bedrock serves as the go-to destination for an extensive selection of high-performance foundation models from industry titans such as AI21 Labs, Amazon, Anthropic, Cohere, Meta, Mistral AI, and Stability AI, all accessible through a single API. Moreover, it provides a comprehensive suite of capabilities encompassing Agents, Guardrails, Knowledge Bases, and Model Evaluation, empowering organizations to craft generative AI applications with an emphasis on security, privacy, and responsible AI. With tens of thousands of organizations worldwide relying on Amazon Bedrock, it has solidified its status as a pivotal player in the realm of generative AI applications.
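
To make the “single API” point concrete, here is a minimal sketch using the `bedrock-runtime` client from boto3, where the same `invoke_model` call is used regardless of the model provider. The request fields (`prompt`, `max_gen_len`, `temperature`, `top_p`) follow the shape assumed here for Meta's Llama models, and the helper names `build_llama_body` and `invoke` and the default region are illustrative assumptions. Other providers expect different body fields.

```python
import json
from typing import Any, Dict


def build_llama_body(prompt: str, max_gen_len: int = 256,
                     temperature: float = 0.5, top_p: float = 0.9) -> str:
    """Serialize a request body in the shape assumed for Meta Llama models."""
    return json.dumps({
        "prompt": prompt,
        "max_gen_len": max_gen_len,
        "temperature": temperature,
        "top_p": top_p,
    })


def invoke(model_id: str, body: str, region: str = "us-east-1") -> Dict[str, Any]:
    """Send a request to a Bedrock-hosted model via the InvokeModel operation.

    model_id is whatever identifier the Bedrock console lists for a model
    your account has been granted access to. Requires AWS credentials.
    """
    import boto3  # imported lazily so the body helper works without boto3

    client = boto3.client("bedrock-runtime", region_name=region)
    response = client.invoke_model(
        modelId=model_id,
        body=body,
        contentType="application/json",
        accept="application/json",
    )
    # The response body is a stream containing a JSON document.
    return json.loads(response["body"].read())
```

Switching providers in this sketch means changing `model_id` and the body builder, while the client and the call itself stay the same.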