Top Tech News Today, January 15, 2026

Technology News Today – Your Daily Briefing on the AI, Big Tech, and Startup Shifts Reshaping Markets

It’s Thursday, January 15, 2026, and today’s tech landscape shows just how fast the stakes are rising across AI, infrastructure, and global regulation. From OpenAI locking in utility-scale compute to governments reshaping chip trade and data-center policy, the industry is moving deeper into an era where software power is defined by hardware, energy, and geopolitics.

Enterprise AI funding remains strong, cybersecurity unicorns are emerging in Europe, and regulators are tightening the screws on data protection and deepfake abuse. At the same time, creators and platforms are drawing new lines around generative content, signaling a cultural shift in how AI is accepted — and restricted.

Here are the top 15 technology news stories shaping the global ecosystem today.

Technology News Today

OpenAI inks $10B+ AI compute deal with Cerebras as infrastructure race hits utility-scale

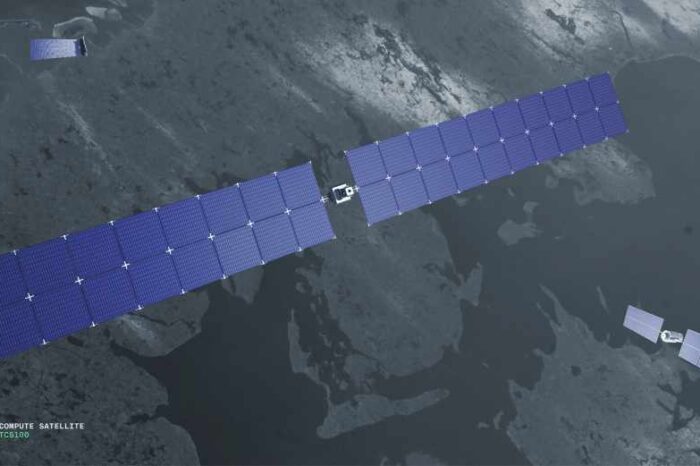

OpenAI has signed a multi-year agreement to tap Cerebras Systems for roughly 750 megawatts of computing capacity — a utility-scale figure that underlines how AI has moved from “cloud spend” to “industrial infrastructure.” The deal, reported late Wednesday, is designed to secure a predictable supply of compute as model training and high-volume inference continue to strain traditional GPU availability.
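For a sense of scale, the 750-megawatt figure can be turned into a rough annual energy estimate. The sketch below assumes, purely for illustration, that the full capacity draws power continuously all year; the `UTILIZATION` value and all names in the code are assumptions, not details from the reported deal.

```python
# Back-of-the-envelope energy estimate for 750 MW of compute capacity.
# ASSUMPTION: continuous draw at full capacity; actual utilization is not reported.
CAPACITY_MW = 750          # capacity figure reported for the deal
HOURS_PER_YEAR = 24 * 365  # 8,760 hours in a non-leap year
UTILIZATION = 1.0          # hypothetical load factor (100% all year)


def annual_energy_twh(capacity_mw: float, utilization: float = UTILIZATION) -> float:
    """Return annual energy in terawatt-hours for a capacity and load factor."""
    mwh = capacity_mw * HOURS_PER_YEAR * utilization
    return mwh / 1_000_000  # 1 TWh = 1,000,000 MWh


if __name__ == "__main__":
    print(f"{annual_energy_twh(CAPACITY_MW):.2f} TWh per year")
```

Under that full-utilization assumption, the estimate comes to about 6.57 TWh per year; a lower load factor scales the number down proportionally.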

The logic is simple: the next competitive edge is increasingly determined by who can lock in power, chips, and deployment capacity faster than rivals. Large buyers are now structuring compute like long-term energy offtake agreements, and that shift favors companies that can finance and operate at massive scale. It also adds pressure on regulators and local communities to decide how quickly they will permit new data center buildouts, grid upgrades, and water use, and on what terms.

Why It Matters: AI leadership is becoming a supply-chain and energy problem as much as a software problem.

Source: Bloomberg.

US sets 25% tariff on select advanced chips tied to Nvidia China shipment framework

The US imposed a 25% tariff on imports of certain advanced semiconductors in a move Bloomberg describes as part of a broader arrangement that allows Nvidia’s Taiwan-made H200 AI processors to ship to China. Under the order, the duty is collected as chips enter the US before they are ultimately shipped onward to foreign customers, including in China.

The policy is notable for blending trade tools with export-control politics. It signals that Washington is still willing to allow some high-end AI hardware to flow, but is seeking economic or strategic concessions in the process. For Nvidia and its ecosystem, it introduces new friction into global routing and compliance, and could influence where companies stage inventory, how they structure contracts, and how quickly Chinese buyers can actually receive approved parts. More broadly, it underscores how AI chip supply has become a geopolitical lever rather than just a commercial product category.

Why It Matters: AI chips are now directly shaped by trade policy — altering costs, logistics, and global access.

Source: Bloomberg.

AI-linked tech selloff hits markets as investors reassess valuations and policy risk

US markets saw renewed pressure on tech-heavy indexes, with Wall Street tracking a fresh wave of investor caution centered on AI-driven valuations, regulation risk, and the durability of mega-cap spending plans. The Wall Street Journal’s market coverage framed the move as part of a broader “AI selloff” narrative—a reminder that even in an infrastructure boom, public markets can pivot quickly when expectations outpace near-term earnings.

The shift matters because it affects the downstream financing environment. When public multiples compress, late-stage private rounds often reprice, IPO windows narrow, and growth-stage startups face stricter efficiency demands. The near-term implication: greater scrutiny of AI companies that promise large enterprise returns without clear deployment traction, and higher standards for “platform” narratives that depend on multi-year payoffs. In the long term, the market’s message is that AI spending is not immune to macroeconomic forces — and that policy uncertainty around chips, data centers, and AI safety can translate into real volatility.

Why It Matters: Public-market sentiment can tighten startup capital and reshape valuation expectations fast.

Source: The Wall Street Journal.

Fusion startup Type One Energy raises $87M as investors double down on grid-scale moonshots

Fusion startup Type One Energy, backed by Bill Gates, raised $87 million as it gears up for a larger fundraise. The round signals that even in a market obsessed with near-term AI revenue, deep-tech investors are still placing meaningful bets on energy breakthroughs that could redefine the cost curve for electricity over the next decade.

Fusion has long lived in the “too far out” category for most funds, but the AI era is changing the narrative. Data centers and electrification are pushing power demand into the spotlight, and governments are increasingly anxious about grid constraints. If fusion startups can show credible engineering milestones, they can position themselves as strategic infrastructure plays rather than pure science experiments. The funding also highlights a broader investor pattern: concentrate capital into fewer, larger bets where technical risk is high but upside is system-level — the same logic driving massive AI infrastructure spending.

Why It Matters: Energy innovation is becoming inseparable from the AI economy’s growth limits.

Source: TechStartups.

AI security startup depthfirst raises $40M to protect models, agents, and enterprise deployments

Security startup depthfirst announced a $40 million Series A, positioning itself at the intersection of enterprise security and AI deployment risk. As companies move from pilots to production—especially when AI agents touch internal tools and data—the attack surface expands in ways traditional security stacks weren’t designed to address.

The strategic angle is that “AI security” is no longer just about model jailbreaks or prompt injection headlines. Enterprises now worry about governance, access controls, and what happens when AI systems generate actions — not just text. Startups in this category are aiming to become the control plane for AI use within large organizations, much as cloud security platforms evolved as infrastructure moved off-premises. If depthfirst and its peers can demonstrate they reduce operational risk without slowing adoption, they may become budget line items in 2026 security planning, especially in regulated industries where AI rollouts are already under compliance scrutiny.

Why It Matters: As AI moves into core workflows, security budgets are shifting toward model- and agent-specific controls.

Source: TechCrunch.

Microsoft publishes “pay our way” plan for AI data centers as local backlash grows

Microsoft outlined a five-point plan to address community concerns about data centers: covering full electricity and infrastructure costs, minimizing and replenishing water use, training locals for jobs, avoiding tax reductions, and investing in community AI skills programs. The announcement reflects a growing political reality: data centers are increasingly facing resistance over utility costs, environmental impact, and perceived limited job creation.

This matters because the AI buildout is colliding with local governance — zoning boards, utility commissions, state tax incentives, and public sentiment. If large operators don’t establish credible “social license” frameworks, projects will face delays, moratorium threats, and higher carrying costs, slowing capacity expansion. Microsoft’s messaging also sets a standard that competitors may be pressured to match, raising the cost of entry for smaller operators and shifting the industry toward fewer, bigger players that can absorb these commitments. In a world where compute equals competitive advantage, permitting becomes a strategic bottleneck.

Why It Matters: Data centers are becoming politically contested infrastructure, and that can slow the growth of AI capacity.

Source: Axios.

Arizona lawmakers target data-center tax breaks as AI infrastructure politics intensify

Arizona lawmakers are weighing whether to roll back a lucrative tax incentive for data centers amid growing neighborhood opposition and mounting concerns about resource usage associated with AI infrastructure. The debate is a preview of what many fast-growing data-center states may face in 2026: incentives that once looked like “easy economic development” are being re-litigated as electricity and water constraints tighten.

The fight matters beyond Arizona because it tests the durability of the policy model that helped accelerate US data-center expansion. If key states reduce incentives, large operators may still build, but they will reprice projects, push for rate structures that protect margins, or shift deployment to more favorable jurisdictions. For startups and cloud customers, this can manifest as higher compute costs or slower regional capacity expansion. The broader implication is that AI infrastructure is increasingly subject to the same friction as other heavy industrial projects: local politics, utility planning, and environmental tradeoffs.

Why It Matters: State-level policy shifts can directly impact AI compute supply and long-term operating costs.

Source: Axios.

Pentagon adds Musk’s Grok to military AI stack, escalating politicization of model choice

The Pentagon plans to integrate Elon Musk’s Grok models into military systems, with Defense Secretary Pete Hegseth framing the decision in explicitly ideological terms. Semafor reports the move alongside the Pentagon’s existing AI relationships and internal tooling, underscoring that government buyers are increasingly treating model selection as both a technical and political decision.

The significance is twofold. First, defense adoption is a validation channel: once a model is embedded in government workflows, it can influence procurement standards and vendor ecosystems. Second, the framing signals a new fault line — “alignment” debates aren’t staying in academia or consumer apps; they are entering national security procurement. That creates reputational and compliance risks for vendors and may accelerate demand for multi-model “broker” platforms that allow agencies to swap models based on task, performance, or policy constraints. In the long run, defense AI may become a battleground for governance norms across the industry.

Why It Matters: Government AI procurement is becoming a strategic and political signal, not just a tech purchase.

Source: Semafor.

Workday finds AI time savings are real, but rework is eating nearly 40% of the gain

A Workday study found most employees report saving one to seven hours per week using AI tools — but nearly 40% of those savings are consumed by rework, such as rewriting, fact-checking, and correcting errors. The findings land at a moment when enterprises are trying to justify broad AI rollouts with productivity claims that can survive CFO-level scrutiny.
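The net effect of those numbers is easy to work out. The sketch below applies the reported rework share to the low and high ends of the reported savings range; treating "nearly 40%" as a flat 40% is an assumption, and the function and constant names are illustrative only.

```python
# Net weekly time savings after rework, using the figures reported in the study.
# ASSUMPTION: rework consumes a flat 40% of gross savings ("nearly 40%" in the study).
REWORK_SHARE = 0.40


def net_hours_saved(gross_hours: float, rework_share: float = REWORK_SHARE) -> float:
    """Hours saved per week once time spent on rework is subtracted."""
    return gross_hours * (1 - rework_share)


if __name__ == "__main__":
    for gross in (1, 7):  # low and high ends of the reported range
        print(f"{gross} h gross -> {net_hours_saved(gross):.1f} h net")
```

On those assumptions, the reported one to seven hours of gross savings shrinks to roughly 0.6 to 4.2 hours of net savings per week.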

The result matters because it reframes the ROI discussion. If AI gains are partially offset by quality control, then the next wave of winners may be less about “more AI everywhere” and more about better tooling around validation, workflow integration, and domain-specific reliability. It also reinforces why companies are investing in guardrails, retrieval systems, and human-in-the-loop processes — not as optional safety add-ons, but as necessary components to turn AI into net productivity. For startups, this suggests a large opportunity in “AI operations”: monitoring, evaluation, and error reduction as a product category.

Why It Matters: Enterprise AI ROI will increasingly hinge on quality controls, not just adoption.

Source: Semafor.

Grok deepfake controls falter as users bypass safeguards amid rising legal scrutiny

The Verge reports it remains easy to bypass X’s attempts to stop Grok from generating nonconsensual sexual deepfakes — particularly “undressing” images — even as scrutiny intensifies in the UK and elsewhere. The story highlights a recurring pattern: rapid feature deployment, delayed guardrails, and an enforcement gap that regulators are increasingly unwilling to tolerate.

The broader significance is that generative image tools are colliding with real-world legal systems faster than platforms can adapt. If a platform cannot demonstrate effective technical restrictions and credible enforcement, it risks investigations, app-store pressure, advertiser flight, and potentially new statutory constraints. For the AI ecosystem, this raises the cost of shipping consumer-facing image generation at scale — especially for companies that don’t want to run heavy moderation infrastructure. It also creates openings for startups to build provenance, watermarking, and content safety tooling that work across multiple models and platforms.

Why It Matters: Weak deepfake safeguards are turning into a regulatory and distribution risk for AI platforms.

Source: The Verge.

OpenAI reportedly chose not to become Apple’s custom Siri model provider, betting on its own hardware path

The Verge cites reporting that OpenAI deliberately chose not to become Apple’s custom model provider after Apple and Google struck a deal to use Gemini for Siri. The implication is that OpenAI is prioritizing long-term control over its product direction—including its own AI hardware ambitions—rather than embedding deeply within another company’s ecosystem.

This matters because the AI platform wars are increasingly about distribution and defaults. Apple can anoint winners by selecting which model sits behind billions of devices, and opting out of that path suggests OpenAI believes it can build a broader, more independent distribution channel — or that the constraints of being Apple’s “invisible engine” aren’t worth it. It also reinforces the strategic importance of hardware and vertical integration: companies that control devices, OS layers, or dedicated inference hardware can dictate economics and user experience. For startups, it’s a reminder that partnerships with platform giants come with tradeoffs that can define your trajectory.

Why It Matters: The biggest AI advantages may come from distribution and hardware control, not just model quality.

Source: The Verge.

AI startup Parloa raises $350M, triples valuation to $3B as Europe’s enterprise AI winners emerge

German AI startup Parloa raised $350 million, tripling its valuation to $3 billion as it expands its enterprise customer-service automation business. The company is pitching AI agents that handle voice-based interactions for large clients and is leaning into a multi-model approach so enterprises can choose different underlying models for different tasks.

The funding matters because it signals investor appetite for AI companies with measurable enterprise pull — not just flashy demos. Parloa’s growth narrative also reflects a broader European trend: startups building practical AI workflow products that can win globally, even as they compete with US hyperscalers and Chinese model builders. As enterprise buyers become more cautious about vendor lock-in and regulatory exposure, multi-model and compliance-forward architectures may be a competitive differentiator. For the market, this round is another sign that “AI application layer” winners can still raise huge checks — but increasingly only when they show strong revenue signals and defensible customer footholds.

Why It Matters: Late-stage capital is flowing to enterprise AI startups that show real adoption and revenue durability.

Source: Financial Times.

Belgian cybersecurity startup Aikido hits unicorn status with $60M Series B

Belgian cybersecurity startup Aikido Security raised $60 million in Series B funding at a $1 billion valuation, becoming one of Europe’s newest security unicorns. The company is positioning its platform as developer-centric security “guardrails” that help teams identify and manage risks as software development accelerates, including the security challenges introduced by AI-assisted coding.

The significance is that security budgets continue to grow even as other enterprise categories face tighter scrutiny, and developer-first security is becoming a major buying pattern. As code is produced faster—often with AI assistance—the risk of shipping vulnerabilities increases, prompting organizations to adopt tools that integrate directly into developer workflows rather than relying solely on downstream security gates. Aikido’s round also highlights a broader European strength: building credible security businesses that can sell into the US market. For founders, it’s a reminder that “boring” security tooling can still become a breakout story when the market tailwind is strong.

Why It Matters: The faster software gets built (especially with AI), the more valuable developer-native security becomes.

Source: TechStartups.

Bandcamp bans music made “wholly or substantially” with generative AI, drawing a bright line for creators

Bandcamp published a formal policy tightening restrictions on generative AI content, stating it will remove music or audio created entirely or heavily with AI tools and encouraging users to report suspected AI-generated uploads. The company framed the move as protecting the human connection central to its marketplace model, where fans directly support artists.

This matters because music platforms have struggled to respond consistently to the flood of low-effort AI-generated audio and impersonation content. Bandcamp is taking a clear stance that differs from more incremental approaches elsewhere, effectively turning AI provenance into a platform governance issue. For creators, the decision may strengthen trust that discovery isn’t being overwhelmed by synthetic catalogs. For the broader tech ecosystem, it’s another case study in how platforms will define “acceptable AI use” in practice, and how policy choices can become product differentiation. It also highlights the growing market for detection, labeling, and rights infrastructure to help platforms enforce rules at scale without over-removing legitimate content.

Why It Matters: Platform rules around generative content are quickly becoming a competitive and trust signal.

Source: Bandcamp (official blog).

French regulator fines telecom subsidiaries $48M after breach, escalating compliance pressure in Europe

France’s data regulator issued $48 million in fines against telecom subsidiaries following a data breach, according to The Record. The enforcement action underscores Europe’s increasingly aggressive stance on privacy and incident accountability—not only requiring disclosure but also imposing substantial financial penalties when protections fall short.

The bigger story is that cybersecurity failures are no longer “IT problems” that end with a remediation plan. Regulators are treating breaches as governance failures tied to compliance, operational controls, and risk management — and the costs can be material. For tech companies and startups selling into Europe, this underscores the importance of security-by-design, vendor risk management, and evidence of controls that can withstand regulatory scrutiny. It also shifts procurement behavior: enterprise buyers increasingly demand proof that vendors can meet regulatory expectations, not just deliver features. The result is a more security- and compliance-driven go-to-market environment across the region.

Why It Matters: European enforcement is raising the financial and operational cost of weak security controls.

Source: The Record.

Wrap Up

That’s your tech briefing for today. As AI infrastructure scales to utility-level power, regulators tighten their grip, and startups secure sizable funding rounds, the tech industry is entering a phase where execution, governance, and real-world impact matter more than hype. From chip policy and data-center politics to cybersecurity enforcement and creator protections, today’s stories show how deeply technology is now woven into global systems. We’ll be back tomorrow to keep tracking the moves that shape what comes next, and how they affect founders, investors, and everyday users alike.

Follow us on X @TheTechStartups for more real-time updates.