Nvidia strikes $20B licensing and asset deal with AI chip startup Groq, acqui-hires founder Jonathan Ross

Nvidia has struck a sweeping $20 billion licensing and asset deal with AI chip startup Groq, according to an exclusive report from CNBC, signaling how inference has moved to the center of the artificial intelligence race.

The agreement gives Nvidia access to Groq’s inference technology and key intellectual property, while bringing Groq founder and CEO Jonathan Ross, president Sunny Madra, and several senior leaders into Nvidia’s ranks. Groq will continue operating as an independent company, with its cloud business remaining separate.

Groq confirmed the agreement in an announcement on Wednesday, saying, “Today, Groq announced that it has entered into a non-exclusive licensing agreement with Nvidia for Groq’s inference technology. The agreement reflects a shared focus on expanding access to high-performance, low-cost inference.”

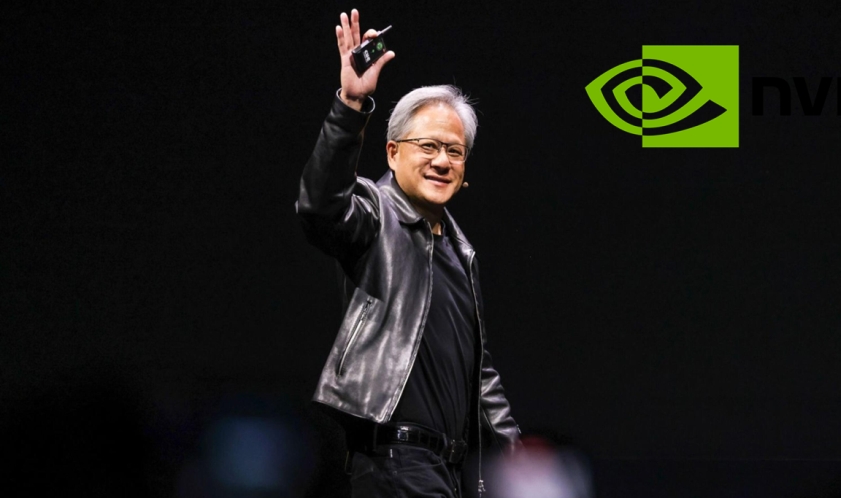

The structure of the deal reflects a familiar pattern that’s taking hold across Big Tech. Nvidia is not acquiring Groq as a company, but it is absorbing much of what made the startup valuable in the first place: the technology and the people behind it. Nvidia CEO Jensen Huang made that distinction clear in an internal memo to employees, writing, “While we are adding talented employees to our ranks and licensing Groq’s IP, we are not acquiring Groq as a company.”

Why Nvidia’s New Groq Deal Shows Inference Is the Next AI Battleground

Groq, founded in 2016 by former Google engineers, has focused on a narrow but increasingly important slice of AI infrastructure. Its language processing unit chips are built for inference, the stage where trained models generate answers, predictions, and outputs in real time. That work has become central as AI systems move from training labs into everyday products. Ross has long argued that inference, not training, will define the era, saying, “Inference is defining this era of AI, and we’re building the American infrastructure that delivers it with high speed and low cost.”

The startup’s chip design relies on embedded memory, a choice Ross has said allows Groq’s processors to be produced and deployed faster while using less energy than traditional GPUs. That pitch has resonated with customers and investors. In September, Groq raised $750 million at a valuation of roughly $6.9 billion in a round led by Disruptive, with backing from BlackRock, Neuberger Berman, Samsung, Cisco, Altimeter, and 1789 Capital.

Alex Davis, CEO of Disruptive, said Nvidia approached Groq while the company was not seeking a sale. He told CNBC that Nvidia is acquiring Groq’s core assets, excluding GroqCloud, the startup’s cloud service that sells access to its inference hardware. Groq confirmed that GroqCloud will continue operating “without interruption” under new CEO Simon Edwards, the company’s former finance chief.

For Nvidia, the deal marks its largest transaction on record by dollar value. The chipmaker’s previous biggest acquisition was its $7 billion purchase of Mellanox in 2019. Nvidia ended October with more than $60 billion in cash and short-term investments, giving it ample room to pursue large strategic bets as competition in AI hardware intensifies.

Huang said the agreement will fold Groq’s low-latency processors into Nvidia’s broader platform. “We plan to integrate Groq’s low-latency processors into the NVIDIA AI factory architecture, extending the platform to serve an even broader range of AI inference and real-time workloads,” he wrote.

Ross, who studied under AI pioneer Yann LeCun and helped create Google’s Tensor Processing Unit, said on LinkedIn that he is joining Nvidia to help integrate the licensed technology. The move brings one of the architects of modern AI accelerators to a company that already dominates the market for advanced AI chips.

The Groq deal follows a series of similar arrangements across the industry. Nvidia paid more than $900 million last year to hire executives from Enfabrica and license its technology. Meta, Google, and Microsoft have each used licensing agreements and talent transfers to lock in AI expertise without full acquisitions. The pattern reflects both regulatory caution and the urgency to secure scarce engineering talent.

Meta Platforms committed $14 billion to Scale AI in a deal that brought the startup’s CEO inside the company to help steer its AI push, the Wall Street Journal reported. Google followed a similar path last year, licensing technology from Character.AI while hiring several of its top executives. Microsoft has used the same playbook, striking a licensing-and-talent deal with Inflection AI.

Groq’s trajectory mirrors that of several AI chip startups that have gained attention during the AI boom. Cerebras Systems, another Nvidia challenger, filed for an IPO before pulling back amid shifting market conditions. Demand for inference hardware continues to rise as companies deploy large language models at scale, spurring interest in alternatives that promise lower latency and energy consumption.

Nvidia shares were little changed after the news, though the stock remains up sharply this year. The muted reaction suggests investors see the Groq deal less as a departure and more as a continuation of Nvidia’s strategy: spend aggressively, lock in talent, and widen its lead as AI infrastructure becomes a core layer of the global economy.
