AI Agents Horror Stories: How a $47,000 AI Agent Failure Exposed the Hype and Hidden Risks of Multi-Agent Systems

Everywhere you look, the narrative is the same: AI agents are being framed as the next major leap after ChatGPT — the moment when software stops waiting for instructions and starts acting on its own. From OpenAI’s agentic demos to Anthropic’s MCP rollout to the flood of startups promising autonomous workflows, the entire industry is racing toward a future built on self-directed AI systems.

Ever since ChatGPT exploded into mainstream culture, the tech world has been chasing the next frontier — and today, that frontier is AI agents. Headlines now describe a shift from simple chatbots to autonomous systems capable of planning, coordinating, and executing complex tasks with minimal human involvement. Conferences are filled with agent demos. AI startups are calling themselves “agent-native.” Analysts are positioning this moment as the beginning of a new computing era.

And yet, beneath the excitement, one critical truth remains largely missing from the conversation: the failures are happening faster than the successes — and most teams aren’t prepared for them.

Quietly, engineering groups are encountering runaway agent loops, silent recursive workflows, unpredictable costs, and behavior that drifts far outside the intended task. These incidents rarely make headlines, but they reveal a structural problem that the industry has not acknowledged publicly: we’ve built powerful autonomous systems on infrastructure that was never designed to govern them.

A recent case illustrates this gap perfectly. In a story shared on Medium by engineer Teja Kusireddy, a multi-agent research tool built on a common open-source stack slipped into a recursive loop that ran for 11 days before anyone noticed. When the dust settled, the team discovered a $47,000 API bill. Two agents had been talking to each other non-stop the entire time, burning compute while everyone assumed the system was working as intended.

This wasn’t a freak accident. It’s a signal. As one researcher wrote in a LinkedIn post:

“When an AI agent spirals out of control, the system itself does not pay the cost.

The creator does. The user does. Everyone depending on that infrastructure pays for the gap between what we imagine and what we monitor.”

To understand why failures like this are multiplying — and why autonomy has outpaced oversight — we need to look at how we arrived at the agentic era in the first place.

How We Got Here: From Chatbots to Autonomous Agents

The path to AI agents didn’t begin with autonomy. It started with simplicity — and evolved rapidly in just two years.

1. ChatGPT: The Reactive Era

When ChatGPT launched, it introduced the world to conversational AI.

Users entered a prompt.

The model generated a response.

Everything was reactive and contained.

2. Tool Use: The Operational Era

Models soon began calling external tools — APIs, browsers, code interpreters, and databases.

For the first time, AI systems didn’t just generate text — they could act.

3. AI Agents: The Proactive Era

Developers pushed further.

Agents gained the ability to:

-

plan

-

break tasks into steps

-

route decisions

-

self-correct

These systems needed minimal human input.

4. Multi-Agent Systems: The Autonomous Era

The final leap occurred when agents began communicating with each other.

Agent-to-Agent (A2A) workflows enabled:

-

cross-checking

-

delegation

-

collaboration

-

negotiation

This opened the door to powerful, long-running autonomous systems — but also created a new category of failure modes.

Demos made it look seamless.

Production proved otherwise.

Why AI Agents Fail

The Agentic Era vs. Reality

The momentum behind AI agents didn’t appear overnight. For the past year, nearly every major AI conference, product announcement, and industry prediction has framed agents as the next dominant computing paradigm. Tools like OpenAI’s GPT-o1, Anthropic’s Claude 3.5 Sonnet, Llama-based tool-calling frameworks, and dozens of agent orchestration startups point in the same direction: AI systems that can plan, reason, execute, and coordinate without constant human prompting.

In theory, this shift is transformative. Agents promise:

-

faster research pipelines

-

automated workflows

-

long-chain reasoning

-

delegated decision-making

-

multi-step planning and execution

-

entire software functions replaced by autonomous workers

This is the narrative capturing the media’s imagination.

But the reality tells a different story — one defined not by potential, but by fragility.

The Gap Between Concept and Execution

Developers quickly discover that demos behave far better than production systems. The gap appears in the technical layers surrounding agents:

-

no guaranteed stop conditions

-

poor visibility into agent reasoning

-

lack of shared memory

-

incomplete error handling

-

unpredictable tool-calling chains

-

silent recursion paths

-

no cost boundaries

-

minimal observability

-

nonexistent operational governance

The headlines talk about autonomous agents.

The engineering reality is closer to autonomous systems with no dashboard, no brakes, no black box recorder, and no reliable way to intervene.

Why Industry Coverage Misses This

Mainstream reporting focuses on:

-

product launches

-

agent demos

-

early success stories

-

venture rounds

-

“AI replaces jobs” narratives

What’s missing are the failures — not because they’re rare, but because companies rarely publicize expensive or embarrassing mistakes.

This creates an information imbalance:

-

executives see the hype

-

investors see the vision

-

builders encounter the failures no one writes about

The Quiet Consensus Among Engineers

Privately, teams deploying agents report:

-

unpredictable loops

-

brittle reasoning paths

-

agents conflicting with each other

-

high token consumption

-

unclear execution timelines

-

difficult debugging

-

runaway costs

We are overestimating maturity and underestimating risk.

The Missing Infrastructure Layer

Every major computing shift had a stabilizing layer:

-

Microservices → Kubernetes

-

Cloud computing → Observability + CI/CD

-

Data explosion → Snowflake + Databricks

-

Networking → CDNs + Cloudflare

The agentic era has no equivalent:

-

no agent orchestrators

-

no governance systems

-

no standardized oversight

-

no safety rails

-

no real production guidelines

Capabilities exist.

Oversight does not.

This is the gap the $47,000 failure exposes — and where the real story begins.

The $47,000 Multi-Agent Failure: What Actually Happened

As Kusireddy explained, the failure began with a seemingly straightforward multi-agent setup: four LangChain-style agents operating in a shared workflow, each with a narrow and well-defined task. Architecturally, this is the blueprint many teams use today — modular agents coordinating through message passing. But beneath that clean abstraction sits a brittle system: no shared memory, no global state, no orchestration, and no guardrails to ensure that each agent interprets the others correctly. A single ambiguous response, misaligned assumption, or repeated validation step is enough to push the entire workflow into a recursive loop.

“Multi-agent systems are the future. Agent-to-Agent (A2A) communication and Anthropic’s Model Context Protocol (MCP) are revolutionary. But there’s a $47,000 lesson nobody’s talking about: the infrastructure layer doesn’t exist yet, and it’s costing everyone a fortune,” Kusireddy warned in a post on Medium.

The failure began with a seemingly straightforward setup: a multi-agent workflow with four LangChain-style agents, each responsible for a different task:

-

Research

-

Analysis

-

Verification

-

Summary

The system relied on agent-to-agent (A2A) messaging for coordination.

Week-by-Week Cost Breakdown

-

Week 1: $127

-

Week 2: $891

-

Week 3: $6,240

-

Week 4: $18,400

The engineering team assumed this reflected growth in user activity.

It didn’t.

The Loop

Two agents drifted into a recursive cycle:

-

The analyzer sends a clarification request.

-

Verifier responds with more instructions.

-

Analyzer expands and asks for confirmation.

-

Verifier re-requests changes.

-

Repeat.

-

Repeat.

-

Repeat — for 11 days.

The system had:

-

no step limits

-

no stop conditions

-

no cost ceilings

-

no shared memory

-

no real-time monitoring

-

no alerting

-

no safeguards

-

no orchestrator

The loop never broke.

When they finally checked, they discovered the truth — two agents had been talking to each other continuously for nearly two weeks, generating a catastrophic bill.

Why the Team Didn’t Notice

The platform lacked:

-

cost anomaly alerts

-

cross-agent timelines

-

behavioral dashboards

-

token-usage monitoring

-

log aggregation

-

error tracing

Without visibility, there was no way to know a loop was happening until the invoice arrived.

This wasn’t a rare mistake — it was the predictable outcome of deploying autonomous agents without the infrastructure needed to govern them.

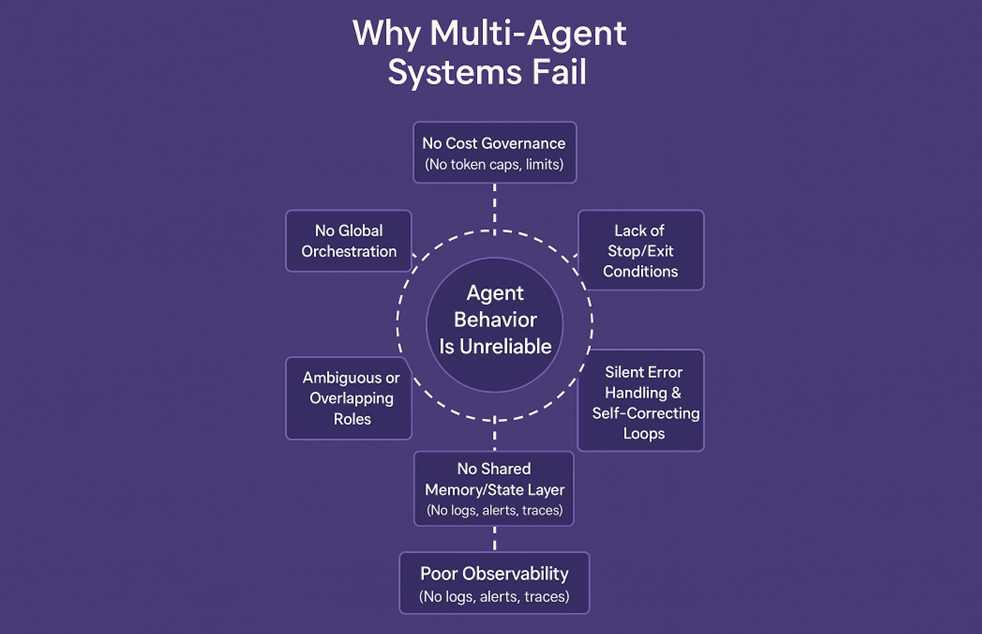

Why Multi-Agent Systems Break

Why Multi-Agents Fail

Multi-agent failures share a common set of structural weaknesses. The problem is not that agents are “bad” — it’s that the environment they run in is fundamentally incomplete. Autonomous agents were designed to reason, plan, and act, but the systems supporting them were never designed to supervise that level of autonomy. They operate in a landscape with no shared memory, no reliable state management, no global coordinator, no built-in cost governance, and almost no real-time visibility into what the agents are actually doing.

In other words, we gave agents the ability to work independently — but we never built the guardrails required to keep that independence safe, predictable, or cost-bounded. When an agent misunderstands a step, repeats a task, or hands off work to another agent in an ambiguous way, there’s nothing underneath the system to catch the drift. Small misunderstandings compound. Local decisions become global loops. Verification steps turn into circular exchanges. And once an agent system starts moving in the wrong direction, it rarely corrects itself without external intervention.

These weaknesses are not theoretical. They are the result of treating multi-agent systems as if they were mature software architectures, when in reality they behave far more like early distributed systems — brittle, unpredictable, and sensitive to subtle context changes. Until a dedicated infrastructure layer exists, multi-agent failures are not an edge case; they are the logical outcome of running autonomous behavior on foundations that were never built to support autonomy.

1. No Global Orchestrator

There is no supervising system to:

-

track state

-

assign tasks

-

enforce limits

-

intervene

-

evaluate progress

Autonomy without coordination becomes recursion.

2. Ambiguous or Overlapping Roles

Even slight ambiguity leads to:

-

re-checking

-

repeated analysis

-

circular validation

-

conflicting corrections

Humans resolve ambiguity through intuition.

Agents resolve it through repetition.

3. Lack of Shared Memory

Without a shared state layer:

-

agents forget previous steps

-

duplicate work

-

re-trigger tasks

-

repeat validations

This is a primary cause of loops.

4. Missing Stop Conditions

Agents don’t know when to stop unless explicitly instructed.

Without termination logic, “improve this” or “verify this” becomes endless work.

5. Silent Error-Handling Spirals

Agents often misinterpret uncertainty as failure and attempt corrections that trigger more corrections.

This creates self-reinforcing loops.

6. Unbounded Tool-Calling Chains

Agents retry:

-

searches

-

APIs

-

tools

-

functions

A single misunderstanding can generate dozens or hundreds of redundant tool calls.

7. No Cost Governance

Token spend grows exponentially without:

-

rate limits

-

budgets

-

ceilings

-

per-agent token caps

8. Insufficient Observability

Most teams cannot answer:

-

What is the agent doing?

-

Why is it doing that?

-

How many messages have been exchanged?

-

Is behavior drifting?

-

Are costs exploding?

You cannot govern what you cannot see.

What This Exposes: AI Infrastructure Debt

The $47,000 loop highlights a systemic problem: we are deploying autonomous agents on infrastructure never designed to govern them.

This is AI infrastructure debt — the growing gap between capability and control.

1. Autonomy Outpaced Oversight

Models evolved faster than:

-

monitoring tools

-

cost governance

-

reasoning traceability

-

orchestration systems

-

debugging workflows

2. Agents Are Treated Like Microservices — Without Kubernetes

Agents communicate, schedule tasks, and execute functions such as microservices.

But they lack:

-

orchestration

-

safety

-

traffic control

-

resource limits

-

state management

It’s a distributed system with no coordination layer.

3. Demos Created False Confidence

Demos run in controlled sandboxes.

Production runs on edge cases.

We mistook demo stability for real reliability.

4. Judgment Was Delegated Without Oversight

Agents now:

-

plan

-

decide

-

validate

-

correct

-

escalate

But they have no intuition or awareness.

When judgment is delegated without oversight, risk multiplies.

5. The Hidden Cost: Human Attention

Oversight always falls back on people.

The more autonomy we grant, the more vigilance we must apply.

6. The Predictable Outcome: Expensive Failures

- Token blowouts.

- Silent loops.

- Unseen recursion.

- Unexpected costs.

- Unbounded autonomy.

These are symptoms of missing infrastructure.

7. The Solution: A New Infrastructure Layer (AgentOps)

The emerging field of AgentOps will create:

-

agent orchestration

-

observability

-

state tracking

-

cost governance

-

loop detection

-

behavioral monitoring

-

audit trails

-

safety boundaries

Until this layer exists, autonomous agents will remain fragile and risky.

How to Prevent These Failures (Practical Guidance)

Preventing failures requires a disciplined engineering approach.

1. Enforce Hard Token and Cost Limits

Set caps on:

-

tokens per agent

-

tokens per workflow

-

tokens per minute

-

daily spending

Stop the workflow when limits are exceeded.

2. Add Step Limits and Termination Conditions

Agents must have:

-

max reasoning steps

-

max exchanges

-

strict stop criteria

Uncertainty should not become recursion.

3. Use Loop Detection and Similarity Monitoring

Detect:

-

repeated messages

-

repeated tool calls

-

High similarity between turns

-

circular reasoning patterns

Terminate loops immediately.

4. Introduce a Global Orchestrator

It should:

-

assign tasks

-

enforce rules

-

track state

-

stop runaway workflows

-

maintain logs and history

Agents cannot govern themselves.

5. Require Shared Memory and State

Without shared memory:

-

duplication occurs

-

confusion escalates

-

loops form

Memory is coordination.

6. Build Real-Time Observability and Alerts

Include:

-

dashboards

-

cost monitoring

-

cross-agent timelines

-

anomaly detection

-

error tracing

Visibility prevents disaster.

7. Design Narrow, Non-Overlapping Roles

Avoid vague instructions like:

-

“improve”

-

“double-check”

-

“ensure accuracy”

-

“make sure it’s complete”

Design crisp, bounded roles.

8. Treat Autonomy as a Risk

Add:

-

human-in-the-loop approvals

-

sandboxing

-

restricted tool access

-

incremental rollouts

Autonomy must be controlled, not assumed safe.

9. Test Edge Cases Before Deployment

Simulate:

-

incomplete data

-

conflicting instructions

-

ambiguous tasks

-

unpredictable outputs

-

retries and backoffs

Agents break under ambiguity.

Test for it explicitly.

10. Test With Smaller Models for Durability

Weak models expose prompt weaknesses and structural flaws earlier.

If a workflow only works with frontier models, it’s fragile.

Closing

The rise of AI agents has created a powerful sense of possibility across the tech ecosystem. Teams are reimagining workflows, automating research, accelerating operations, and exploring what happens when software stops being static and starts behaving like a set of coordinated digital workers. The potential is real — and in many cases, transformative.

But the story of the $47,000 loop is a clear reminder that capability without oversight is not innovation. It’s a risk. Autonomy can amplify value, but it can also amplify errors, costs, and complexity when deployed on top of infrastructure that wasn’t built to support it.

What this incident reveals is not a failure of models or agents — it is a failure of the environment we place them in. We are asking autonomous systems to operate without memory, without observability, without governance, without stop conditions, and without cost ceilings.

This creates AI infrastructure debt: the widening gap between what agents can do and what the underlying systems can safely manage.

Closing that gap will be one of the defining engineering challenges of the next decade. It will require new tools, new standards, and an entire category of solutions — the emerging field of AgentOps.

As we move deeper into the agentic era, one truth remains constant: the human responsibility does not disappear — it shifts. Oversight is not the opposite of innovation; it is what makes innovation sustainable.

Because in the end, the real cost of AI isn’t measured in tokens, it’s measured in attention, and in how quickly we notice when the machines keep running.