Top Tech News Today, February 12, 2026

It’s Thursday, February 12, 2026, and here are the top tech stories making waves today — from AI and startups to regulation and Big Tech. The global tech landscape is shifting fast as AI moves from ambition to infrastructure, regulation tightens around powerful models, and capital flows reshape who can scale and who can’t. Over the past 24 hours, Big Tech has doubled down on massive data center investments, governments have pushed harder on AI oversight and national security access, and startups across AI, energy, and space have faced a sharper reality check on execution and funding.

Today’s stories capture a turning point: AI’s growth is no longer limited by ideas but by power, memory, and policy. From billion-dollar data centers and next-generation AI hardware to the politics of AI regulation, cybersecurity funding risks, and the race to operationalize AI inside real businesses, the signals are clear. The next phase of technology leadership will be decided by who can deploy responsibly, scale sustainably, and survive a far more selective market.

Below are the 15 most important global technology news stories shaping AI, startups, and the future of innovation today.

Technology News Today

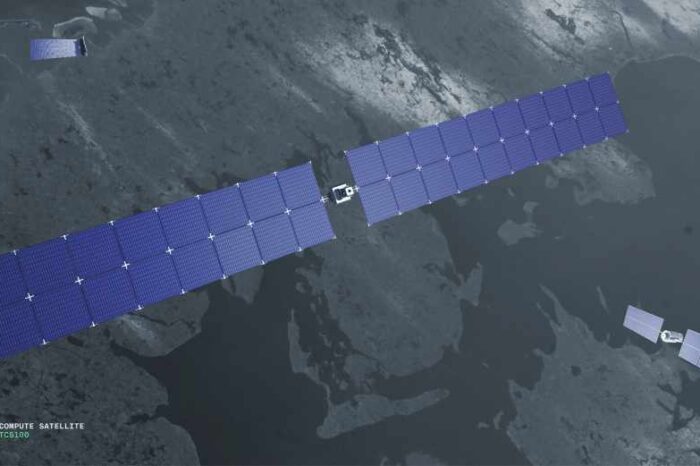

Meta’s $10B AI Data Center Push Lands in Indiana as the Compute Arms Race Accelerates

Meta has begun construction on a roughly $10 billion data center project in Lebanon, Indiana, a signal that the company is still in full “build” mode as AI demand shifts from model demos to industrial-scale infrastructure. The site is expected to come online in late 2027 or early 2028 and is designed for about 1 gigawatt of power, putting it in the same class as the mega-facilities now reshaping utility planning, land use, and regional power markets.

The significance goes beyond one company’s footprint. The AI boom is increasingly constrained by physical realities: grid interconnects, transmission buildouts, water availability, and local permitting battles. Meta’s move also underscores a trend in how Big Tech is deploying capital: data centers are no longer “backend IT”; they’re strategic assets that determine how fast a company can train, fine-tune, and serve frontier models at scale. And as these builds multiply, regulators and community groups are scrutinizing who pays for infrastructure upgrades, how power is sourced, and what long-term obligations companies carry after the ribbon cutting.

Why It Matters: AI competition is becoming a contest of power capacity, permitting speed, and hardware supply, not just algorithms.

Source: Reuters.

Pentagon Presses AI Leaders to Deploy on Classified Networks, Setting Up a High-Stakes Policy Clash

U.S. defense leaders are pushing major AI companies to bring their tools deeper into the national security stack, including classified networks, and to do so with fewer usage restrictions than many firms currently allow. The thrust is clear: the Pentagon wants cutting-edge commercial AI available across classification levels for mission planning and sensitive workflows, not limited to carefully sandboxed pilots.

The friction is equally clear. AI firms have been cautious about enabling certain categories of use, especially those that resemble autonomous targeting, domestic surveillance, or high-consequence decision-making. Defense officials argue the tools should be usable if they comply with U.S. law. Critics counter that “lawful” is not the same as “safe,” particularly given known issues around hallucinations, model brittleness under adversarial pressure, and the operational risks of deploying probabilistic systems in environments where mistakes can be catastrophic. The result is a looming negotiation over liability, auditability, guardrails, and what “responsible deployment” means when the customer is the military.

Why It Matters: If AI becomes embedded in classified workflows, today’s product policies could become tomorrow’s national security flashpoints.

Source: Reuters.

Anthropic Enters the 2026 Midterm Election Fight, Backing Candidates Who Want Stricter AI Rules

Anthropic is stepping directly into U.S. politics with a $20 million investment to strengthen support for tougher AI regulation and related policies, such as export controls. The move escalates a widening rift inside the AI ecosystem: one camp argues the industry needs firmer guardrails now, while another warns that a patchwork of rules (especially at the state level) could slow deployment and cede ground to overseas competitors.

This isn’t just a philosophical dispute. It’s about who sets the rules for model accountability, disclosure, safety testing, and the allowable use of AI in sensitive domains. It’s also about the political economy of AI: companies with the most compute and distribution can often adapt more quickly to regulation, while smaller startups may struggle with compliance burdens. At the same time, in the absence of guardrails, the costs of harm—fraud, deepfake abuse, discriminatory decision-making—are shifted to consumers, schools, courts, and local governments. Anthropic’s bet is that shaping the policy environment now is less risky than trying to retrofit trust later.

Why It Matters: AI regulation is becoming an election issue, and the industry is now funding both sides of the debate.

Source: The Wall Street Journal.

Samsung Claims Early Lead in HBM4 Shipments as AI Memory Becomes the New Bottleneck

Samsung says it has started commercial shipments of HBM4, the next-generation high-bandwidth memory designed for the AI accelerator era. The company didn’t name the customer, but the competitive subtext is obvious: advanced memory is one of the most constrained inputs in the AI supply chain, and winning “design-in” status on top accelerators can translate into years of durable revenue.

HBM has become critical because modern AI training and inference increasingly hit memory bandwidth and capacity limits, not just raw compute. If a GPU can’t be fed fast enough, expensive silicon sits idle. That’s why HBM positioning is now strategic for both chipmakers and cloud operators: it determines the throughput of training clusters, the economics of inference, and the availability of next-gen instances. Samsung’s update also matters geopolitically. The AI supply chain is already a pressure point in export controls and industrial policy; leadership in HBM manufacturing can shift leverage across Korea, the U.S., China, and Europe as governments compete to localize critical components.
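To make the "expensive silicon sits idle" point concrete, here is a minimal roofline-style sketch in Python. Every number in it is a hypothetical placeholder chosen for easy arithmetic, not a specification of HBM4 or of any real accelerator, and the function names are illustrative only. The idea is simply that achievable throughput is the smaller of peak compute and memory bandwidth multiplied by the work done per byte moved.

```python
def attainable_tflops(peak_tflops: float, bandwidth_tbs: float, flops_per_byte: float) -> float:
    """Roofline estimate: throughput is capped by compute or by memory traffic.

    peak_tflops    -- hypothetical peak compute of the accelerator (TFLOP/s)
    bandwidth_tbs  -- hypothetical memory bandwidth (TB/s)
    flops_per_byte -- arithmetic intensity of the workload (FLOPs per byte moved)
    """
    memory_bound_tflops = bandwidth_tbs * flops_per_byte
    return min(peak_tflops, memory_bound_tflops)


def utilization(peak_tflops: float, bandwidth_tbs: float, flops_per_byte: float) -> float:
    """Fraction of peak compute the workload can actually use."""
    return attainable_tflops(peak_tflops, bandwidth_tbs, flops_per_byte) / peak_tflops


if __name__ == "__main__":
    PEAK = 1000.0  # assumed peak compute, TFLOP/s (placeholder)

    # A low-intensity workload on an assumed 4 TB/s memory system.
    print(utilization(PEAK, bandwidth_tbs=4.0, flops_per_byte=50.0))   # 0.2

    # Same workload with memory bandwidth doubled: utilization doubles too.
    print(utilization(PEAK, bandwidth_tbs=8.0, flops_per_byte=50.0))   # 0.4

    # A high-intensity workload is compute-bound, so extra bandwidth adds nothing.
    print(utilization(PEAK, bandwidth_tbs=4.0, flops_per_byte=500.0))  # 1.0
```

Under these assumed figures, the low-intensity workload uses only a fifth of the chip until bandwidth improves, which is the dynamic that makes memory supply strategically important.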

Why It Matters: In AI hardware, memory is increasingly the choke point—and HBM leadership can reshape who captures the profits.

Source: Bloomberg.

Check Point Hits Record Billings, Framing 2026 as the Year Enterprises Must Secure AI Rollouts

Check Point reported record billings of around $1 billion, pointing to growing enterprise demand tied to AI-driven change across corporate networks. The company’s message is that AI adoption is expanding the attack surface—more APIs, more identity sprawl, more third-party tools, and more sensitive data moving through systems that weren’t designed for this pace of automation.

This is part performance story, part market signal. Security leaders are dealing with two simultaneous shifts: (1) traditional threats getting cheaper and faster thanks to automation, and (2) internal AI deployments creating new governance gaps—shadow AI usage, data leakage risks, and model supply-chain concerns. For large firms, the security conversation is no longer “should we allow AI,” but “how do we allow it without creating uncontrolled risk?” That’s a tailwind for vendors that can package controls in a way that fits procurement reality: compliance mapping, centralized policy, measurable risk reduction, and integrations that don’t require a rip-and-replace.

Why It Matters: As AI moves into core workflows, security spending is becoming a prerequisite for adoption, not an afterthought.

Source: Bloomberg.

Samsung Teases Galaxy S26 Cycle With Bigger Trade-In Credits as “Galaxy AI” Becomes the Main Pitch

Ahead of its February 25 Unpacked event, Samsung is dangling unusually high incentives—up to $900 in trade-in credits—while signaling that the product story will revolve heavily around its AI software layer. That’s telling: phone makers are leaning on AI features to create differentiation in a mature hardware market where year-over-year spec bumps are less compelling to many buyers.

The broader trend is that consumer hardware is being rebranded as “AI-first,” with a focus on on-device summarization, smarter photo/video workflows, context-aware assistants, and tighter app integration. The question is what customers will pay for. Some features meaningfully reduce friction; others feel like a marketing wrapper around capabilities that remain inconsistent or gated behind subscriptions. Samsung’s trade-in push suggests it expects intense competitive pressure in 2026, especially as Apple, Google, and Chinese OEMs race to control the “assistant layer” between users and their apps.

Why It Matters: Smartphones are turning into the next battleground for AI distribution—and trade-in economics are becoming part of the AI strategy.

Source: The Verge.

UK Space Startup Orbex Nears Collapse, Spotlighting How Brutal the Launch Market Has Become

Orbex, a Scottish rocket startup, is reportedly on the verge of collapse despite receiving £26 million in taxpayer-backed loans. The company had pitched itself as part of a new wave of domestic launch capability for the UK and Europe, aiming to deliver small satellites to orbit with a “lower-carbon” approach and launches from Scotland. But repeated delays and funding setbacks appear to have pushed the company toward the edge.

The failure mode here is familiar in frontier tech: hardware timelines slip, costs compound, and capital markets tire of open-ended execution risk. Space is especially unforgiving because the “demo” moment is not a product video—it’s a live launch with minimal tolerance for error. Orbex’s struggles also underline Europe’s broader challenge: building competitive launch and manufacturing capacity while facing tighter venture conditions and intense competition from SpaceX and increasingly capable players in Asia. Governments want strategic capability, but public funding rarely covers the full financing gap required to reach operational cadence.

Why It Matters: Space is entering an era where only teams with capital depth, manufacturing discipline, and launch cadence survive.

Source: The Guardian.

OpenAI’s Chief Economist Says AI’s Biggest 2026 Problem Isn’t Models—It’s Adoption Inside Companies

At a workplace-focused conference, OpenAI’s chief economist highlighted a reality many executives recognize: the gap between what models can do and how organizations actually use them is still wide. Workers often assign AI tasks that are too small (basic search) or too big (asking for end-to-end solutions without structure), creating a “Goldilocks” adoption problem where value is missed on both ends.

This matters because the next phase of AI is less about headline-grabbing model releases and more about operational integration: workflow redesign, training, data governance, and honest measurement of productivity gains. Enterprises are also learning that “AI rollout” is not a single project. It becomes a portfolio of changes across customer support, sales ops, finance, engineering, and compliance, each with its own failure modes. The winners won’t just have access to AI; they’ll have playbooks for change management, policies that reduce risk without blocking experimentation, and internal tools that make correct usage the default. That’s where startup opportunity lives too: in products that help companies reliably extract value, not just experiment.

Why It Matters: The AI winners in 2026 may be the firms that operationalize adoption, not the ones that merely buy access to models.

Source: Semafor.

Ex-Google Exec Launches Industrial AI Startup Focused on Oil Refineries and Chemical Plants

A former senior Google leader has launched a new AI company aimed at oil refineries and chemical plants, betting that specialized industrial environments remain underserved by general-purpose AI platforms. These facilities are complex, safety-critical, and expensive to operate—meaning even modest efficiency or reliability gains can translate into real dollars.

Industrial AI is also structurally different from consumer AI. Data is messy and often locked behind legacy systems. Workflows are governed by safety constraints and regulatory standards. And the upside is tied to operational outcomes: reduced downtime, fewer incidents, tighter process control, and more predictive maintenance. Startups that succeed here tend to win by combining deep domain knowledge with credible deployment discipline, not by shipping flashy demos. The broader implication is that AI’s next growth wave may come from “boring” sectors such as energy, manufacturing, and logistics, where budgets exist and the ROI case can be proven in hard metrics.

Why It Matters: Vertical AI is moving into high-stakes industrial environments where accuracy and reliability matter more than novelty.

Source: Semafor.

Uber Eats Rolls Out an AI Grocery Assistant as Delivery Apps Chase Higher-Margin Baskets

Uber Eats is rolling out an AI assistant designed to help users with grocery shopping, a sign that delivery platforms are trying to reduce friction in categories where selection and substitution decisions can be annoying. Groceries also have a different economic profile than restaurant delivery: larger baskets, more repeat purchases, and greater potential for deeper retailer partnerships.

This is another example of AI shifting from “chat” to “commerce enablement.” The practical value isn’t philosophical; it’s whether the assistant can reliably handle preferences, brands, dietary needs, and real-time inventory constraints without creating customer service headaches. If it works, it can increase conversion, reduce cart abandonment, and improve satisfaction during out-of-stock substitutions—one of the most common points of friction in grocery delivery. It also opens the door to a new layer of competition: if assistants become the shopping interface, platforms that control the assistants can influence what gets recommended, how deals are presented, and which private-label products are surfaced.

Why It Matters: AI is becoming the new storefront for everyday purchases—and the assistant layer may decide who wins consumer attention.

Source: Axios.

NIST Funds Quantum-and-AI Small Businesses, Signaling Continued U.S. Emphasis on Frontier Tech Supply Chains

The National Institute of Standards and Technology (NIST) is allocating about $3.19 million across eight Phase II SBIR projects spanning areas including AI, semiconductors, biotech, and quantum information science. While the dollar amount is modest relative to Big Tech capex, SBIR Phase II support often functions as an important bridge from lab validation to prototype and early commercialization.

The broader signal is that governments are treating quantum and advanced AI as strategic technologies, not just academic pursuits. Funding programs like this also shape ecosystems: they help small firms attract follow-on capital, hire specialized talent, and partner with universities or primes. In quantum specifically, progress is increasingly tied to engineering execution—control systems, error mitigation, fabrication, and packaging—not just theoretical breakthroughs. For startups, non-dilutive funding can be the difference between surviving the long path to product-market fit and getting stuck in perpetual “pilot” mode.

Why It Matters: Frontier tech commercialization is being nudged by targeted public funding that helps startups cross the prototype gap.

Source: Quantum Computing Report.

Fusion Startup Inertia Raises Another $450M, Keeping Investor Appetite Alive for “Post-AI” Energy Bets

Inertia Enterprises, a laser-fusion startup with ties to the U.S. national lab ecosystem, has raised an additional $450 million. The funding suggests that—even as AI absorbs attention and infrastructure spending—capital is still flowing into long-horizon energy technologies that could reshape power generation if the physics and engineering can be made commercially repeatable.

Fusion is an extreme version of frontier tech: timelines are long, technical uncertainty is high, and commercialization requires not only scientific milestones but manufacturing scalability, regulatory pathways, and grid integration. Yet the demand driver is real. AI data centers, electrification, and national resilience goals are intensifying the search for reliable, high-density, low-carbon power. Investors appear to be making a portfolio bet that at least a handful of fusion approaches can reach viable pilot plants, even if many won’t. For the startup ecosystem, this also creates second-order opportunities in enabling technologies such as lasers, superconducting magnets, materials science, simulation, and high-performance power electronics.

Why It Matters: The power demands of AI and electrification are keeping “moonshot energy” investments on the table.

Source: Photonics.

Microsoft Tests High-Temperature Superconductors for Data Centers as Grid Constraints Become a Core AI Problem

Microsoft is exploring high-temperature superconductors (HTS) to reduce energy losses and shrink the physical footprint required for power delivery in data centers. The company’s framing is pragmatic: as data center demand grows, the bottleneck isn’t only chips—it’s how efficiently facilities can pull, distribute, and manage electricity without overburdening local grids.

While this is still an exploration rather than a finished deployment, it reflects how hyperscalers are moving “down the stack” into grid engineering. HTS systems can reduce transmission losses and help stabilize power delivery during peak demand, which matters when a single facility’s load rivals that of a small city. If approaches like this mature, they could reshape data center design standards and influence how utilities plan upgrades. For startups, it’s another sign that the “AI infrastructure” market is broadening into power hardware, cooling, demand-response software, and energy procurement—areas where innovation can be just as defensible as model IP.

Why It Matters: Solving AI scale increasingly means solving power delivery, not just building faster GPUs.

Source: Microsoft Azure Blog.

DHS Funding Deadline Could Sideline U.S. Cyber Defense Programs, CISA Warns

U.S. cyber defense could take a direct operational hit if Congress fails to extend funding ahead of a Department of Homeland Security deadline, according to CISA warnings about staffing and program continuity. The risk is straightforward: furloughs and paused programs can slow incident response readiness and reduce support for state and local partners at exactly the wrong time, as ransomware and supply-chain threats remain persistent.

This story matters because cybersecurity capacity is not a switch you flip on and off without consequences. Response teams require continuity, institutional knowledge, and active coordination with private-sector critical-infrastructure operators. Even short disruptions can create gaps in monitoring, training, and preparedness, especially when adversaries track public signals of reduced capacity. For the broader tech ecosystem, it’s also a reminder that cybersecurity is now intertwined with governance: budgeting decisions and political standoffs can translate into real exposure, including delayed advisories, slower coordination, and reduced help for organizations facing active intrusions.

Why It Matters: Cyber resilience depends on continuity—and funding lapses can create openings adversaries don’t ignore.

Source: GovInfoSecurity.

AI Inference Startup Modal Labs Reportedly in Talks at a $2.5B Valuation as Demand Shifts From Training to Serving

AI inference startup Modal Labs is reportedly in talks to raise at a valuation of around $2.5 billion, reflecting investor focus on the part of AI that actually touches users: deploying and scaling inference reliably. As enterprises move from experimentation to production, the cost and speed of inference—and the tooling around it—become major constraints.

Inference is where the economics get real. Training costs may be lumpy and headline-grabbing, but inference runs every day and scales with usage. That makes infrastructure efficiency and developer experience decisive: routing, caching, model orchestration, latency guarantees, and cost controls. Startups in this layer are competing not only with one another but also with hyperscalers that can bundle services. Modal’s fundraising discussions suggest investors believe there’s still room for specialized platforms that simplify deployment across clouds, optimize performance, and give developers a more direct path from model to production system. The implied bet: the “picks and shovels” for AI serving remain a high-growth market even as model capabilities plateau or commoditize.
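The contrast between one-time training spend and usage-driven inference spend can be sketched with simple arithmetic. The Python below is an illustration under stated assumptions, not a model of Modal's or anyone else's pricing: the dollar figures, request volumes, and function names are all hypothetical, and cached responses are assumed to cost nothing.

```python
import math


def daily_inference_cost(requests_per_day: float,
                         cost_per_1k_requests: float,
                         cache_hit_rate: float = 0.0) -> float:
    """Daily serving cost, assuming cache hits are free (a simplification)."""
    if not 0.0 <= cache_hit_rate <= 1.0:
        raise ValueError("cache_hit_rate must be between 0 and 1")
    billable_requests = requests_per_day * (1.0 - cache_hit_rate)
    return billable_requests / 1000.0 * cost_per_1k_requests


def days_until_inference_exceeds_training(training_cost: float,
                                          daily_cost: float) -> int:
    """First day on which cumulative inference spend strictly exceeds training spend."""
    if daily_cost <= 0:
        raise ValueError("daily_cost must be positive")
    return math.floor(training_cost / daily_cost) + 1


if __name__ == "__main__":
    TRAINING_COST = 1_000_000.0   # hypothetical one-time training spend, USD
    REQUESTS_PER_DAY = 5_000_000  # hypothetical traffic
    COST_PER_1K = 2.0             # hypothetical serving cost per 1,000 requests, USD

    baseline = daily_inference_cost(REQUESTS_PER_DAY, COST_PER_1K)
    print(baseline)                                                        # 10000.0
    print(days_until_inference_exceeds_training(TRAINING_COST, baseline))  # 101

    # Serving half of all requests from a cache halves the daily bill.
    cached = daily_inference_cost(REQUESTS_PER_DAY, COST_PER_1K, cache_hit_rate=0.5)
    print(cached)                                                          # 5000.0
    print(days_until_inference_exceeds_training(TRAINING_COST, cached))    # 201
```

With these placeholder inputs, serving costs overtake the training bill in a few months, and a cost control such as caching roughly doubles that runway, which is why tooling in this layer attracts investor attention.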

Why It Matters: The next AI platform winners may be the ones that make inference cheaper, faster, and easier to operationalize.

Source: TechCrunch.

That’s your quick tech briefing for today. Follow @TheTechStartups on X for more real-time updates.