NVIDIA unveils Alpamayo, the world’s first “thinking” AI for autonomous vehicles

The moment autonomous vehicles stop reacting and start explaining themselves may have finally arrived.

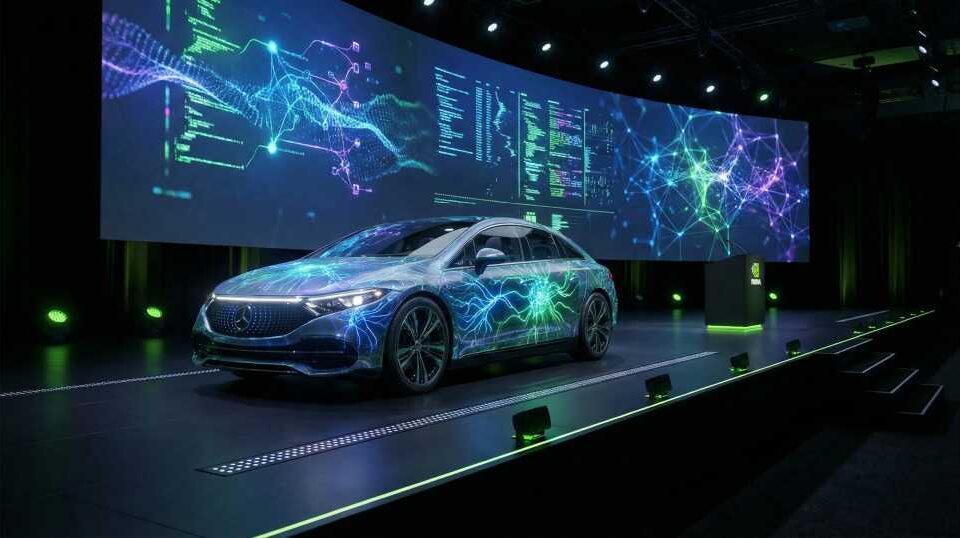

Today at CES, NVIDIA introduced Alpamayo, a new AI system that CEO Jensen Huang describes as the first autonomous-vehicle AI that can think through its actions, explain why it chose them, and show how it plans to execute them. The system is expected to reach U.S. roads later this year, starting with the Mercedes CLA.

For years, self-driving systems have relied on layered pipelines. One model sees. Another plans. A third executes. That separation has worked well in clean, predictable situations, but it has struggled when reality throws curveballs: construction zones that do not match the map, pedestrians who hesitate, change direction, or act irrationally, and edge cases that engineers rarely see in training data but drivers encounter every day.

What Is NVIDIA Alpamayo? The Reasoning AI Powering the Next Generation of Autonomous Vehicles

Alpamayo is NVIDIA’s answer to that long-standing problem. It replaces fragmented decision-making with a single end-to-end system that connects perception, language, and action. The idea is simple to describe and difficult to execute: let the car see the scene, reason through its implications, decide what to do, and explain the logic behind the choice.

“It’s trained end-to-end, literally from camera in to actuation out. It reasons what action it is about to take, the reason by which it came about that action, and the trajectory,” Huang said.

That reasoning layer is the fundamental shift. Alpamayo introduces Vision-Language-Action models that process video input, infer context, generate a driving plan, and attach a visible reasoning trace to each decision. Instead of a black box outputting a steering command, developers can inspect how the system arrived at its conclusion.

At the center of the release is Alpamayo 1, a 10-billion-parameter model that NVIDIA is making openly available to researchers and developers on Hugging Face. The model ingests video, produces trajectories, and emits reasoning chains that reveal its decision path. NVIDIA says developers can distill Alpamayo into smaller runtime models or use it as a teacher model for evaluation tools, auto-labeling systems, and simulation pipelines. Open model weights and open-source inference scripts are included in the release, with larger versions planned.

This matters for safety in ways that traditional autonomy stacks have struggled to address. Rare events, often called long-tail scenarios, are where systems tend to fail silently. A reasoning model can slow down, evaluate cause and effect, and adapt when a situation falls outside prior experience, and its reasoning trace records why it made each choice. That transparency is a step toward building trust with regulators, engineers, and the public.

“The ChatGPT moment for physical AI is here — when machines begin to understand, reason and act in the real world,” Huang said. “Robotaxis are among the first to benefit. Alpamayo brings reasoning to autonomous vehicles, allowing them to think through rare scenarios, drive safely in complex environments and explain their driving decisions — it’s the foundation for safe, scalable autonomy.”

Alpamayo is not a single model release. NVIDIA is positioning it as a full ecosystem for reasoning-based autonomy. Alongside Alpamayo 1, the company released AlpaSim, an open-source simulation framework for closed-loop testing with realistic sensors and traffic dynamics, and a large Physical AI Open Dataset with more than 1,700 hours of driving footage collected across diverse geographies and conditions. The goal is a feedback loop in which models learn, are tested in simulation, improve through data, and repeat.

Rather than running directly inside production vehicles, Alpamayo acts as a large-scale teacher. Carmakers and autonomy teams can adapt it to their own stacks, train smaller models on proprietary fleet data, and validate behavior before anything touches a real road.

That approach is drawing attention across the mobility sector. Companies including Lucid Motors, Jaguar Land Rover, and Uber have signaled interest, along with academic groups such as Berkeley DeepDrive. Many see reasoning-based autonomy as the missing piece for reaching Level 4 deployment without hardcoding endless rules.

The broader implication is subtle but significant. Autonomous vehicles have logged millions of miles, yet progress has slowed as edge cases pile up. Alpamayo suggests a different path forward: fewer brittle rules, more structured reasoning, and systems that can explain themselves when something goes wrong.

If that vision holds up outside the lab, self-driving cars may soon do more than drive. They may finally be able to tell us why.

Below is a video of Alpamayo in action.

NEWS: NVIDIA just announced Alpamayo, what CEO Jensen Huang calls the world’s first thinking, reasoning autonomous vehicle AI, launching on U.S. roads later this year, starting with the Mercedes CLA.

Jensen: “It’s trained end-to-end. Literally from camera in to actuation out; It… pic.twitter.com/oqHPYLa0Xz

— Sawyer Merritt (@SawyerMerritt) January 5, 2026