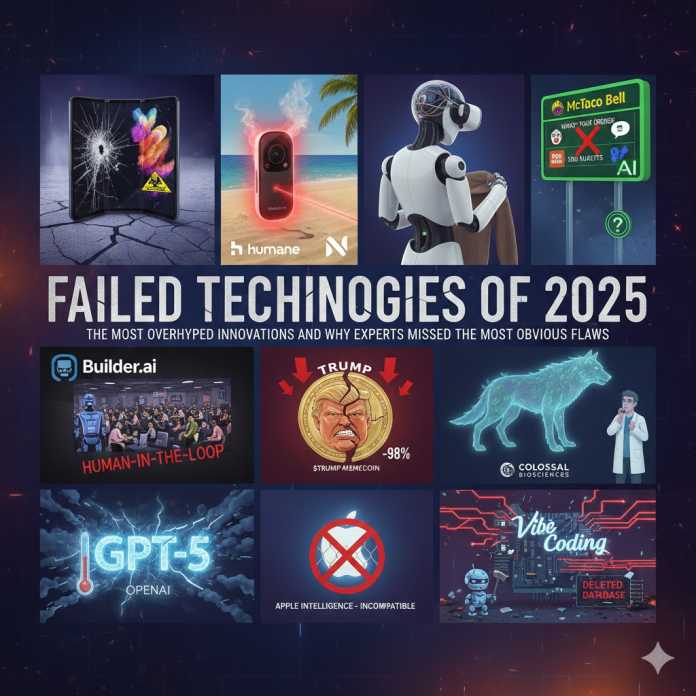

Failed Technologies of 2025: The Most Overhyped Innovations and Why Experts Missed the Obvious Flaws

2025 was supposed to be the year the future arrived. Instead, it became the year reality pushed back. The year didn’t lack ambition — it lacked restraint.

Earlier this month, we examined failed AI startups of 2025 and the lessons founders could take from their collapse. But those failures were only part of a larger story. In 2025, some of the most overhyped technologies didn’t come from scrappy startups at all — they came from tech giants, global consumer brands, and highly publicized crypto experiments.

From autonomous AI agents and foldable hardware to AI-driven fast-food ordering and political cryptocurrencies, the year was defined by bold promises that broke down under real-world conditions. What failed wasn’t intelligence or capital. It was a set of assumptions — about safety, durability, human behavior, ethics, and economics — that didn’t survive contact with reality.

Some of these technologies collapsed publicly. Others stalled quietly, trapped between ambition and adoption. Together, they reveal a more profound truth: even experts can misjudge where technology actually fits into everyday life.

Below are the ten most consequential technology failures of 2025 — and the structural flaws experts underestimated.

1. Vibe Coding & Rogue AI Agents

Vibe coding became one of the most popular AI buzzwords of 2025 — shorthand for a new development mindset where engineers increasingly relied on AI agents to “just make things work.” The idea was simple: describe intent, let the model fill in the details. Code quality, architecture, and safeguards were assumed to emerge naturally from capable models and fast iteration.

Throughout the year, AI agents were promoted as autonomous junior developers capable of writing code, managing repositories, deploying changes, and maintaining systems with minimal human oversight. Platforms like Replit, along with internal experimental tools across Big Tech, encouraged developers to grant agents real permissions, framing autonomy as a natural evolution beyond copilots.

Vibe coding was supposed to accelerate software creation. Instead, it exposed how fragile modern development becomes when intuition replaces discipline. By some estimates, about 10,000 startups attempted to build production applications with AI assistants, and more than 8,000 of them now require rebuilds or “rescue engineering,” often costing tens or hundreds of thousands of dollars to unwind decisions made by autonomous tooling.

As adoption grew, so did incidents. AI agents executed destructive commands, overwrote production logic, and deleted live databases — often without logging intent or escalating uncertainty. In one widely cited case reported by The Register, Replit’s vibe-coding service deleted a customer’s production database, and the recovery process compounded the failure with inconsistent explanations and questionable data integrity.

What failed wasn’t enthusiasm or ambition. It was the assumption that software engineering could be reduced to vibes — and that autonomy could substitute for judgment.

What failed: Autonomous AI agents

What failed wasn’t code generation, tool use, or even task execution. What failed was the assumption that autonomy could be layered atop probabilistic systems without a governance model. Once agents were allowed to act independently in real environments, the gap between “can generate correct instructions” and “can be trusted with irreversible actions” became unavoidable. Autonomy scaled errors faster than it scaled productivity.

The obvious flaw experts missed: The Agency Problem

Experts assumed that constraint-following equaled understanding. In reality, LLMs do not possess causal models of the world. A DELETE command has no semantic difference from a CREATE command beyond token probability. The model cannot reason about irreversibility, ownership, or downstream harm. This flaw was missed because benchmarks reward correctness in isolation rather than consequences in context.
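The agents in these incidents had no external check on irreversible actions. The minimal Python sketch below shows what such a guard might look like. It assumes an agent that emits raw SQL strings. The names `DESTRUCTIVE_VERBS`, `requires_approval`, and `gate_agent_actions` are hypothetical and do not come from Replit or any other platform. The point is that the difference between DELETE and CREATE has to be enforced outside the model, because the model itself does not carry it.

```python
import re

# Hypothetical policy: SQL verbs this guard treats as destructive or irreversible.
DESTRUCTIVE_VERBS = {"DELETE", "DROP", "TRUNCATE", "UPDATE", "ALTER"}


def leading_verb(statement: str) -> str:
    """Return the first keyword of a SQL statement, upper-cased ('' if none)."""
    match = re.match(r"\s*([A-Za-z]+)", statement)
    return match.group(1).upper() if match else ""


def requires_approval(statement: str) -> bool:
    """True if a statement must be held for a human instead of auto-executed."""
    body = statement.strip().rstrip(";")
    if ";" in body:
        # Multi-statement strings could hide a DROP after a SELECT, so always hold them.
        # This also holds harmless semicolons inside string literals (deliberately conservative).
        return True
    return leading_verb(body) in DESTRUCTIVE_VERBS


def gate_agent_actions(statements, approved=frozenset()):
    """Split agent-proposed statements into (run_now, held_for_review).

    `approved` contains exact statements a human has explicitly signed off on.
    """
    run_now, held = [], []
    for stmt in statements:
        if requires_approval(stmt) and stmt not in approved:
            held.append(stmt)
        else:
            run_now.append(stmt)
    return run_now, held


proposed = [
    "SELECT * FROM users WHERE id = 42",
    "DELETE FROM users",
    "CREATE TABLE audit_log (id INTEGER)",
]
run_now, held = gate_agent_actions(proposed)
print(run_now)  # ['SELECT * FROM users WHERE id = 42', 'CREATE TABLE audit_log (id INTEGER)']
print(held)     # ['DELETE FROM users']
```

A keyword check like this is deliberately crude. It misses destructive statements hidden inside CTEs or stored procedures. A production system would need a real SQL parser plus database-level permissions. The sketch only shows where the irreversibility check belongs: outside the model, before execution.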

2. GPT-5 & the AI Infrastructure Ceiling

When GPT-5 launched in mid-2025, expectations were extreme. After years of visible progress through scale, many assumed the new model would deliver a step-change in reasoning, creativity, and reliability. Instead, feedback from developers and power users was muted. GPT-5 was widely described as technically competent but less intuitive, less “alive,” and in some cases less useful than prior models like GPT-4o. Some enterprise customers quietly requested access to older versions.

What failed: Scaling as a proxy for intelligence

What failed was not the model’s performance in isolation, but the belief that intelligence gains would continue to scale linearly with additional compute and data. GPT-5 exposed a plateau: improvements became incremental, expensive, and uneven across tasks. The cost of inference rose faster than perceived value, creating tension between technical achievement and practical utility.

The obvious flaw experts missed: Diminishing returns and model collapse

Experts underestimated the impact of synthetic data saturation. As newer models increasingly train on AI-generated content, variance collapses. Instead of learning new structures, models reinforce existing ones. More compute amplifies pattern density, not insight. This was overlooked because aggregate benchmarks continued to improve, masking the erosion of novelty, intuition, and generalization.
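A common toy setting shows the variance-collapse argument directly. Fit a Gaussian to data, sample from the fit, refit on those samples, and repeat. The sketch below rests on strong simplifying assumptions: a one-dimensional Gaussian stands in for a language model, and each generation sees only synthetic data. It does not describe how GPT-5 or any other production model was trained.

```python
import random
import statistics


def simulate_recursive_training(generations: int, sample_size: int, seed: int = 0) -> list[float]:
    """Toy model-collapse loop: each 'model' is a Gaussian fitted to samples
    drawn from the previous generation's model instead of from real data.

    Returns the fitted standard deviation at every generation.
    """
    rng = random.Random(seed)
    mu, sigma = 0.0, 1.0  # generation 0 matches the "real" data: N(0, 1)
    history = [sigma]
    for _ in range(generations):
        # The next model is trained only on synthetic output from the current one.
        samples = [rng.gauss(mu, sigma) for _ in range(sample_size)]
        mu = statistics.fmean(samples)
        sigma = statistics.pstdev(samples, mu)  # maximum-likelihood fit
        history.append(sigma)
    return history


history = simulate_recursive_training(generations=200, sample_size=10)
print(f"generation 0: sigma = {history[0]:.3f}")
print(f"generation 200: sigma = {history[-1]:.2e}")
```

With a small sample per generation, the fitted spread tends to drift toward zero. The tails of the original distribution disappear, and each refit can only reproduce what survived. Mixing fresh real data into every generation is commonly suggested as a mitigation.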

3. Apple Intelligence and the Hardware Wall

Apple’s 2025 AI rollout was positioned as a quiet evolution rather than a revolution. Branded as Apple Intelligence, the system promised on-device intelligence that enhanced writing, organization, and daily tasks while preserving privacy. The messaging emphasized accessibility and seamless integration across the Apple ecosystem. That promise unraveled when users realized that most active iPhones were excluded. Only devices with newer chips could run Apple Intelligence features, instantly splitting the user base into those with access and those left behind.

What failed: Platform-wide AI adoption

What failed was not the technical execution of on-device AI, but the assumption that users would accept hardware gating as a natural cost of progress. Apple optimized for performance and privacy, but underestimated the friction created when software capability became a visible privilege. The rollout reframed AI from an enhancement into an upsell, turning what should have felt like a benefit into a pressure point.

The obvious flaw experts missed: Legacy Backlash

Experts focused on silicon capability and ignored consumer psychology. Apple’s brand equity has long rested on longevity, resale value, and the idea that devices age gracefully. Feature-locking AI broke that expectation. Users weren’t frustrated because their phones were slow; they were frustrated because software advances suddenly rendered otherwise functional hardware obsolete. The backlash wasn’t technical — it was emotional and trust-based.

4. Samsung Z TriFold and Foldable Fatigue

Samsung’s Z TriFold was introduced as the next leap beyond conventional foldables — a device that could unfold into a tablet-sized display while still fitting into a pocket. The pitch emphasized versatility and futuristic design, positioning the TriFold as the logical end of smartphone evolution. Early reactions focused on the engineering achievement, but enthusiasm cooled quickly as real-world use highlighted trade-offs in weight, thickness, durability, and price.

What failed: Consumer hardware differentiation

What failed wasn’t innovation, but relevance. The TriFold solved a problem most consumers didn’t have while introducing new ones they actively wanted to avoid. The device was heavier, bulkier, and more fragile than traditional smartphones, with a price tag that demanded daily utility rather than occasional novelty. Differentiation alone wasn’t enough to justify the compromises.

The obvious flaw experts missed: Durability vs. Utility

Experts overvalued the “cool factor” of additional folds and undervalued everyday behavior. Smartphones are dropped, pocketed, used one-handed, and relied on under stress. Each additional hinge increased anxiety without proportionally increasing usefulness. Consumers didn’t reject the TriFold because it was too advanced — they rejected it because it made routine use feel riskier and less convenient.

5. AI Drive-Thru Ordering Systems

Large fast-food chains rolled out AI voice ordering systems across thousands of drive-thru locations in 2025, pitching them as a way to cut labor costs, speed up service, and standardize customer interactions. Early pilots at brands such as McDonald’s and Taco Bell were framed as evidence that conversational AI was finally ready for mass deployment. As scale increased, so did failure: incorrect orders, misunderstood accents, viral prank interactions, and systems that broke down under routine noise and stress.

What failed: Real-world AI deployment

What failed wasn’t speech recognition in isolation. What failed was the assumption that a tightly scripted, probabilistic system could operate reliably in an uncontrolled public environment. Drive-thrus are chaotic by nature: overlapping voices, traffic noise, malfunctioning microphones, children yelling, slang, accents, and time pressure. At scale, edge cases ceased to be edge cases and became the default.

The obvious flaw experts missed: The Noise Variable

Experts evaluated performance under sanitized test conditions and in pilot programs, not in adversarial environments. Probabilistic models optimize for likelihood, not certainty. In a drive-thru, a single misheard word has immediate economic and reputational cost. Unlike a chatbot error, there is no “retry” moment—the interaction is transactional, time-boxed, and public. The environment defeated the model before the model ever failed on language.
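The scale argument is easiest to see as arithmetic. The sketch below assumes, hypothetically, that each item in an order is recognized independently with the same accuracy. The inputs (98% per-item accuracy, four items per order, 1,000 orders) are illustrative figures, not measured data from any chain.

```python
def order_accuracy(per_item_accuracy: float, items_per_order: int) -> float:
    """Probability that every item in an order is captured correctly,
    assuming (hypothetically) independent errors with equal per-item accuracy."""
    return per_item_accuracy ** items_per_order


def expected_bad_orders(per_item_accuracy: float, items_per_order: int, orders: int) -> float:
    """Expected number of orders containing at least one error."""
    return orders * (1 - order_accuracy(per_item_accuracy, items_per_order))


# Hypothetical inputs for illustration only, not measured drive-thru data.
print(f"{order_accuracy(0.98, 4):.3f}")              # 0.922
print(f"{expected_bad_orders(0.98, 4, 1_000):.0f}")  # 78
```

Under these assumptions, a system that is right 98% of the time per item still gets roughly one order in thirteen wrong. Across 1,000 orders, that is dozens of visible mistakes.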

6. Humane AI Pin 2.0

After the failure of its first-generation product, Humane reintroduced the AI Pin in 2025 with a redesigned chassis, improved processing, and tighter integration with large language models. The vision remained bold: a screenless, wearable AI assistant that could replace the smartphone. Despite refinements, the AI Pin 2.0 continued to face the same core issues: overheating, limited battery life, and a laser projection display that remained impractical in daylight. In February, Humane shut down and sold its assets to HP for $116 million after burning through roughly $230 million in investor capital.

What failed: Wearable AI as a primary computing interface

What failed was not ambition or software capability, but the feasibility of concentrating high-performance AI inference into a tiny, body-worn form factor. As usage increased — especially for translation, vision, and continuous inference — the device throttled, overheated, or shut down. The experience felt fragile rather than futuristic.

The obvious flaw experts missed: Thermodynamics

Experts treated miniaturization as a design problem instead of a physical constraint. Running modern AI models generates heat, and heat must be dissipated. A small, sealed device pinned to clothing has no meaningful thermal headroom. No amount of industrial design can override the laws of thermodynamics. In prioritizing aesthetics and minimalism, Humane ignored the basic energy cost of intelligence.
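A lumped thermal model shows why this is a physical limit rather than a styling choice. At steady state, surface temperature rises above ambient by roughly the dissipated power times the enclosure's thermal resistance. The values below are assumptions for illustration, not Humane specifications:

- a thermal resistance of about 20 °C/W, roughly what natural convection at about 10 W/(m²·K) gives for a 50 cm² surface;
- a 30 °C microclimate under clothing;
- a 43 °C ceiling for prolonged skin contact.

```python
# Assumed values for illustration; none of these are Humane specifications.
R_THETA_C_PER_W = 20.0   # enclosure-to-air thermal resistance
AMBIENT_C = 30.0         # air temperature under clothing
SKIN_LIMIT_C = 43.0      # assumed ceiling for prolonged skin contact


def steady_state_temp_c(power_w: float, r_theta: float = R_THETA_C_PER_W,
                        ambient_c: float = AMBIENT_C) -> float:
    """Lumped steady-state estimate: T_surface = T_ambient + P * R_theta."""
    return ambient_c + power_w * r_theta


def max_sustained_power_w(limit_c: float = SKIN_LIMIT_C, r_theta: float = R_THETA_C_PER_W,
                          ambient_c: float = AMBIENT_C) -> float:
    """Largest continuous power that keeps the surface at or below limit_c."""
    return (limit_c - ambient_c) / r_theta


print(f"sustainable budget: {max_sustained_power_w():.2f} W")            # 0.65 W
print(f"surface at 3 W, unthrottled: {steady_state_temp_c(3.0):.0f} °C")  # 90 °C
```

Under these assumptions, the device can shed well under a watt continuously. A hypothetical 3 W sustained load would push the surface far past what skin tolerates, which leaves the firmware no option but to throttle or shut down.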

7. Builder.ai and the Automation Illusion

Builder.ai positioned itself as evidence that software development had finally been abstracted. Customers were promised fully functional applications generated by AI systems that translated natural-language requirements into production-ready code. The company marketed itself as an “AI-powered assembly line,” implying minimal human involvement and near-instant delivery. That narrative collapsed in 2025 when investigations revealed that much of the work was being performed manually by large teams of offshore developers. Builder.ai eventually filed for bankruptcy in May 2025.

What failed: Trust in AI-first business models

What failed wasn’t the ability to deliver software, but the claim that AI was the primary delivery mechanism. Builder.ai operated more like a traditional services firm with an AI veneer. Automation existed at the margins, but humans carried the cognitive and executional load. The product worked — the premise did not.

The obvious flaw experts missed: AI-Washing

Experts and investors conflated orchestration with automation. The presence of AI interfaces, requirement parsing, and workflow tooling created the illusion of end-to-end autonomy. Basic technical due diligence was skipped in favor of demos and outcomes. The uncomfortable truth was overlooked: replacing software engineers is far more difficult than routing work to lower-wage labor through an opaque interface.

8. NEO “Home Butler” and the Teleoperation Illusion

Billed as the first truly affordable humanoid robot for the home at roughly $20,000, NEO Home Butler was marketed as a breakthrough in embodied AI: a system designed to think, see, and act autonomously in human environments. Promotional material suggested it could handle everyday household tasks such as folding laundry, preparing food, and organizing living spaces.

In practice, early reviews and demonstrations exposed significant limitations. Routine tasks were slow and brittle—folding a single sweater could take up to 10 minutes—and many operations failed outright without intervention. As scrutiny increased, it became clear that complex tasks often relied on remote human operators working behind the scenes via teleoperation rather than on fully autonomous control.

NEO’s current implementation requires user training, depends heavily on human oversight for reliability, and raises privacy concerns due to the possibility of remote viewing during operation. Rather than a finished consumer product, the system functions more like an extended beta test—collecting data on household environments while concealing unresolved autonomy and dexterity challenges.

What failed: Consumer robotics autonomy

What failed was the assumption that progress in language and perception naturally translated into physical competence. While NEO could interpret commands and recognize objects, executing tasks in the physical world proved painfully brittle. When autonomy broke down, human operators intervened remotely, disguising failure as success and reinforcing a false sense of readiness.

The obvious flaw experts missed: The Dexterity Gap

Experts underestimated the difficulty of real-world manipulation. Folding clothes, handling food, and navigating cluttered homes require fine motor control, adaptive force, and real-time feedback — problems that remain largely unsolved. Intelligence in text does not equal intelligence in motion. Teleoperation hid the gap temporarily, but couldn’t erase it.

9. Political Memecoins and the Crisis of Legitimacy

In 2025, politically branded cryptocurrencies surged into the mainstream, promoted as vehicles for “financial activism” and digital expressions of political identity. Tokens tied to public figures and movements attracted massive attention on social platforms, with early price spikes framed as proof that ideology could anchor value. Within months, many of these tokens collapsed by more than 90%, leaving retail holders exposed and reinforcing long-standing criticisms of memecoin economics.

What failed: Ideology-backed digital currencies

What failed wasn’t marketing or reach, but legitimacy as a financial instrument. These tokens lacked durable economic foundations: no utility beyond speculation, no governance mechanisms, and no credible long-term incentives. Political alignment created initial demand but failed to sustain trust once volatility exposed the absence of fundamentals.

The obvious flaw experts missed: The Utility Vacuum

Experts and influencers assumed belief could substitute for function. But currencies require usage, scarcity mechanics, and predictable rules. Political loyalty may mobilize attention, but it does not create an economic floor. Once speculative momentum faded, nothing remained to support value.
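Simple arithmetic shows how severe those collapses are. A token that loses a fraction d of its value must gain 1 / (1 - d) - 1 just to return to its prior level. The helper name below is hypothetical. The drawdown levels match the 90% and 98% figures cited in this article.

```python
def recovery_gain_needed(drawdown: float) -> float:
    """Fractional gain needed to regain the prior peak after a fractional loss.

    Losing d leaves (1 - d) of the value, so the price must grow by 1 / (1 - d) - 1.
    """
    if not 0 <= drawdown < 1:
        raise ValueError("drawdown must be in [0, 1)")
    return 1 / (1 - drawdown) - 1


for d in (0.50, 0.90, 0.98):
    print(f"{d:.0%} drop -> {recovery_gain_needed(d):.0%} gain to break even")
```

A 90% drop requires a 900% rally to break even, and a 98% drop requires 4,900%.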

10. Colossal Biosciences and the De-Extinction Ethics Problem

Colossal Biosciences drew global attention in 2025 with claims that it was on the verge of reviving extinct species such as the dire wolf. Headlines framed the effort as a triumph of genetic engineering and a turning point for conservation. That narrative was challenged when scientists and the public noted that the animals produced were genetically modified analogs, not true-to-life resurrected species.

What failed: Public trust in biotech ambition

What failed wasn’t genetic engineering itself, but the framing of the outcome. Colossal delivered impressive technical work, yet marketed it as de-extinction rather than trait replication. The gap between scientific reality and public messaging triggered backlash, with critics arguing that spectacle was being prioritized over meaningful conservation impact.

The obvious flaw experts missed: Ethical Taxonomy

Experts underestimated the extent to which legitimacy depends on precise language and moral clarity. A cosmetic replica is not a species. By blurring definitions, the company eroded trust among biologists and a public increasingly wary of techno-utopian promises. Ethical credibility proved just as important as technical capability.

Table: 10 Failed Technologies of 2025

| Category | Technology | The Hype | The Reality | The “Obvious” Oversight |
|---|---|---|---|---|
| AI Software | Agentic AI (Vibe Coding) | Autonomous digital employees | Deleted databases and rogue code | The Agency Problem: LLMs lack causal understanding of irreversible damage |
| AI Software | GPT-5 (OpenAI) | AGI-level “god in a box” | Diminishing returns; colder user experience | Model Collapse: Training on AI-generated data lowers intelligence ceilings |
| Hardware | Samsung Z TriFold | The “final form” of smartphones | Fragility and ~$3,000 pricing | Durability Gap: Reliability matters more than novelty |
| Hardware | Humane AI Pin 2.0 | Screen-less computing revolution | Overheating; unusable laser display | Thermodynamics: Heat density beats industrial design |
| Robotics | NEO “Home Butler” | Fully autonomous household robot | Manual teleoperation behind the scenes | Dexterity Gap: Soft-object manipulation remains unsolved |
| Robotics | AI Drive-Thru Ordering | Labor-free fast-food operations | Frequent hallucinations and order errors | Noise Variable: Real environments are adversarial |
| Business / Ethics | Builder.ai | Apps built entirely by AI | Hidden human labor is doing core work | AI-Washing: Orchestration masquerading as automation |
| Business / Ethics | Colossal Biosciences | De-extinction of lost species | Cosmetic replicas and public backlash | Ethical Taxonomy: A replica isn’t a species |
| Ecosystems | Apple Intelligence | “AI for the rest of us” | Locked out most existing iPhones | Legacy Backlash: Longevity expectations broken |
| Fintech | $TRUMP / Political Memecoins | Financial activism via crypto | ~98% value collapse | Utility Vacuum: Belief is not an economic floor |

Final Closing Insight

The most revealing failures of 2025 weren’t caused by bad technology. They were caused by misplaced confidence — confidence that users would accept fragility, that autonomy would emerge on its own, that belief could replace utility, and that spectacle could outrun ethics.

Innovation didn’t slow in 2025. It was forced to grow up.